Privacy & Security Guardrails for AI: Practical Layers for Real-World Protection

1. Introduction — When Privacy Becomes the Price of Progress

In today's hyper-connected world, privacy is not just a silent expectation but a fragile privilege. When we send email, train AI models and use smart devices, bits of our personal information are being collected, analyzed, and stored somewhere — out of our sight. Our lives have become digital ecosystems — rich with information, but largely vulnerable by design.

Artificial intelligence has also amplified the powers and the dangers of this information age. Privacy used to mean thinking about the threat to your credit cards and browser cookies. AI systems, on the other hand, process everything from medical histories and voice patterns to biometric scans and private conversations. They don't just see us; they learn us. This new level of intimacy presents unique benefits — great conveniences — and unimagined risks.

The promise of personalized, ever-advancing AI technology comes with a hidden cost: privacy. The same algorithms that predict the music for your favorite playlists can also infer your emotions, track your location, and map out your identity, often without your explicit consent. As AI systems evolve and amass ever more information, data becomes one of the world's most valuable currencies, and protecting it one of the most pressing challenges we face.

Hence the need for privacy guardrails and security guardrails. Think of them as digital safety rails that keep technology ethical, transparent, and accountable. They do not inhibit AI; they guide it. Guardrails define how data should be collected, what happens to it during processing, and how it may be shared. Properly designed, and combined with AI guardrails, they form an invisible harness of trust that balances what machines are able to do with what they should do.

By the end of this piece, you’ll have a complete understanding of how:

⟶ privacy guardrails protect individuals from personal and identity exposure.

⟶ security guardrails safeguard sensitive financial, health, and biometric data.

⟶ AI guardrails ensure responsible, transparent, and ethical use of data within intelligent systems.

We’ll explore real-world cases of data leaks, dissect why existing systems fail, and uncover the frameworks that leading organizations are adopting to build trust at scale.

2. The Unseen Storm: How AI Turned Privacy Into an Open Secret

The loss of privacy in the digital age did not happen overnight. It eroded quietly, one algorithm at a time, as the quest to make technology smarter turned into an industry-wide thirst for personal data. Every click, every query, and every camera frame became a potential data point, feeding the machine's ever-expanding "learning." Today, AI does not merely process information; it absorbs it, learns from it, and may retain traces of it far longer than anyone intended.

The world has entered a new phase of connectedness, one in which privacy disappears wherever systems are built to collect by default. Without strong privacy guardrails and security guardrails, the line between giving consent and outright surveillance becomes dangerously thin.

2.1 The Endless Appetite for Data and the Need for AI Guardrails

AI systems feed on data: the more they are given, the more they learn. From forecasting customer behavior to diagnosing disease accurately, every gain in machine-learning quality depends on having the right data in the right amount.

But this appetite has a darker side. Many organizations collect far more data than they need and store masses of it "just in case." This hoarding mentality creates technical debt and adds ethical risk on top of it. Without AI guardrails that define exactly which data is necessary and how it may be used, boundaries are easily crossed, and personal or sensitive information ends up being collected from users who never explicitly consented to its handling.

As models become more complex, it’s no longer enough to ask, “Can AI learn this?” The real question is, “Should it?”
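One way to make "which data is necessary" enforceable rather than aspirational is to declare, for each processing purpose, the only fields that purpose may touch. The sketch below assumes a hypothetical `ALLOWED_FIELDS` registry and `minimize` helper; it illustrates purpose-based data minimization and is not any specific product's API.

```python
# Hypothetical purpose registry: each processing purpose lists the only fields it may use.
ALLOWED_FIELDS = {
    "order_fulfillment": {"order_id", "shipping_address", "items"},
    "demand_forecasting": {"items", "order_date", "region"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields declared necessary for `purpose`.

    Undeclared purposes are rejected outright, so any new use of data
    has to be registered (and reviewed) before data can flow to it.
    """
    if purpose not in ALLOWED_FIELDS:
        raise PermissionError(f"undeclared processing purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {field: value for field, value in record.items() if field in allowed}


record = {
    "order_id": 17,
    "email": "ana@example.com",
    "birth_date": "1990-01-01",
    "items": ["book"],
    "order_date": "2024-05-01",
    "region": "EU",
}
print(minimize(record, "demand_forecasting"))
# {'items': ['book'], 'order_date': '2024-05-01', 'region': 'EU'}
```

Because the email address and birth date are not declared for forecasting, they never reach that pipeline, and an unregistered purpose such as "marketing" fails loudly instead of silently receiving everything.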

2.2 The Myth of Anonymization and Weak Privacy Guardrails

For years, companies have claimed that anonymizing data safeguards user privacy. Put more bluntly, anonymization protects far less than it promises. Modern re-identification techniques use AI to correlate many seemingly harmless datasets until individuals stand out.

Imagine, for instance, a person's shopping history combined with GPS traces of their movements and their browsing patterns. Together, these signals can erase anonymity almost instantly. Weak or absent privacy guardrails have allowed such techniques to flourish, and the people exposed by them are usually unaware that it has happened.
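The re-identification risk described above can be measured before data is released. The sketch below, using made-up toy records, computes k-anonymity (the size of the smallest group of rows sharing the same quasi-identifiers) and shows a simple linkage attack that joins an "anonymized" table to a named auxiliary list. Treating ZIP code, birth year, and gender as the quasi-identifiers is an assumption for illustration; the same principle applies to combinations like shopping, location, and browsing data.

```python
from collections import Counter

QUASI_IDENTIFIERS = ("zip", "birth_year", "gender")  # assumed for illustration


def qi_key(row: dict) -> tuple:
    """The combination of quasi-identifier values for one row."""
    return tuple(row[q] for q in QUASI_IDENTIFIERS)


def k_anonymity(rows: list[dict]) -> int:
    """Size of the smallest group of rows sharing identical quasi-identifiers.

    k == 1 means at least one person is unique on these attributes.
    """
    return min(Counter(qi_key(r) for r in rows).values())


def link(anon_rows: list[dict], named_rows: list[dict]) -> list[tuple[str, dict]]:
    """Linkage attack: re-identify anonymized rows whose quasi-identifiers
    match exactly one person in a named auxiliary dataset."""
    index: dict[tuple, list[str]] = {}
    for r in named_rows:
        index.setdefault(qi_key(r), []).append(r["name"])
    return [(index[qi_key(r)][0], r) for r in anon_rows
            if len(index.get(qi_key(r), [])) == 1]


# Toy "anonymized" health data: names removed, quasi-identifiers kept.
anon = [
    {"zip": "02139", "birth_year": 1985, "gender": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1985, "gender": "F", "diagnosis": "flu"},
    {"zip": "02141", "birth_year": 1972, "gender": "M", "diagnosis": "diabetes"},
]
# Toy public list with names (e.g. a membership directory).
public = [
    {"name": "Alice", "zip": "02139", "birth_year": 1985, "gender": "F"},
    {"name": "Beth", "zip": "02139", "birth_year": 1985, "gender": "F"},
    {"name": "Carl", "zip": "02141", "birth_year": 1972, "gender": "M"},
]

print(k_anonymity(anon))  # 1
print([(name, row["diagnosis"]) for name, row in link(anon, public)])  # [('Carl', 'diabetes')]
```

A k of 1 means someone is unique on those attributes; generalizing values (for example, truncating ZIP codes) or suppressing rare rows raises k before the data leaves the organization.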

2.3 Shadow Data: The Invisible Threat to Security Guardrails

Not all data exposure is caused by hackers. Some of it results from neglect. "Shadow data" has become a by-product of many industries and is now regarded as one of the biggest blind spots in AI data security.

Shadow data is information that still exists but sits in neglected places: old backup drives and tapes, repurposed storage, and test environments whose data was never deleted.

These forgotten copies live in untracked databases that bypass security guardrails entirely. Because they are invisible to official storage inventories, they often hold outdated, unredacted, or unprotected personal data. The result is an unseen and unmonitored risk that attackers exploit and regulators penalize.

Eliminating shadow data requires a complete, continuously updated data inventory and continuous governance: the two lifelines that every modern system of AI guardrails depends on.
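Continuous discovery ultimately comes down to comparing what actually exists against what the official inventory says exists. A minimal sketch, assuming a discovery scan has already produced a list of data stores; the `DataStore` fields and the 365-day staleness threshold are hypothetical choices.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DataStore:
    name: str
    last_accessed: date
    contains_pii: bool


def find_shadow_data(discovered, registered_names, today, stale_after_days=365):
    """Compare discovered stores against the official inventory.

    Flags stores missing from the inventory ("unregistered") and registered
    stores holding PII that nobody has accessed for a long time ("stale PII").
    """
    findings = []
    for store in discovered:
        if store.name not in registered_names:
            findings.append((store.name, "unregistered"))
        elif store.contains_pii and today - store.last_accessed > timedelta(days=stale_after_days):
            findings.append((store.name, "stale PII"))
    return findings


discovered = [
    DataStore("prod_customers", date(2024, 6, 1), True),
    DataStore("backup_2019_tape", date(2019, 3, 1), True),
    DataStore("test_copy_customers", date(2023, 1, 15), True),
]
registered = {"prod_customers", "backup_2019_tape"}
print(find_shadow_data(discovered, registered, today=date(2024, 6, 30)))
# [('backup_2019_tape', 'stale PII'), ('test_copy_customers', 'unregistered')]
```

Running such a comparison on a schedule, rather than once, is what turns a one-off cleanup into governance.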

2.4 Privacy by Design: From Buzzword to Baseline for AI Guardrails

"Privacy by design" has become a common refrain in tech circles, but few organizations actually achieve it. The idea is to build privacy principles (consent, data minimization, transparency) into a system's structure from the very beginning rather than adding them as an afterthought.

When privacy is tacked on after the fact, it becomes a compliance exercise. But when it is built in at the outset, it becomes a competitive advantage. Strong AI guardrails operationalize this principle, ensuring that every step in the life cycle of a model -- from training and deployment to feedback and storage -- is governed by data ethics and safety.

The future belongs to builders who treat privacy guardrails not as legal mandates but as product features. Companies that adopt this view will not only avoid regulatory penalties but also win loyalty, trust, and long-term viability.

3. The Chain Reaction: When One Breach Triggers Another

A data leak is not an isolated event; it is the first link in a long chain. One weak point in privacy protection, such as a server left unguarded, a misconfigured API, or an unencrypted database, can trigger a cascade of exposures across systems, departments, and industries. A single oversight of this kind can easily open the door to fraud, identity theft, or the manipulation of personal data.

Because today's AI systems are so deeply interconnected, a privacy leak from one source rarely stays confined to that source. Without strong privacy guardrails, a single weakness can multiply across networks, producing breaches that affect users, institutions, the public, and businesses alike.

3.1 The Domino Effect of Data Exposure Without Privacy Guardrails

The digital ecosystem acts like a spider’s web — each node radiating to millions of other nodes. If one line in the web is severed, pressure goes out everywhere. A small leak of data can reveal personal identifiers that later can be used to penetrate banking systems, health systems, or biometric records of individuals.

Many breaches remain undetected for months because no effective privacy guardrails are in place to flag unusual data flows or unauthorized access. Once attackers obtain one dataset, they use it to compromise others: a classic domino effect in which the first piece of leaked data becomes the key to unlocking the rest.

When the dam bursts, it is rarely because of an attacker's brilliance. It is because the layers of guardrails that could have caught the first leak, while it could still be sealed, were missing.
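A basic guardrail against odd data flows combines an allowlist of approved destinations with a per-source volume baseline. The sketch below is illustrative: the destination names, baseline figures, and 10x multiplier are assumptions, and a real deployment would feed it from network or audit logs.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    source: str
    destination: str
    records: int


APPROVED_DESTINATIONS = {"analytics.internal", "billing.internal"}  # hypothetical


def review_flows(flows, baseline, multiplier=10):
    """Return alerts for flows that go to unapproved destinations, come from
    sources with no known baseline, or exceed `multiplier` x the usual volume."""
    alerts = []
    for f in flows:
        usual = baseline.get(f.source)
        if f.destination not in APPROVED_DESTINATIONS:
            alerts.append(f"unapproved destination: {f.source} -> {f.destination}")
        elif usual is None:
            alerts.append(f"no baseline for source: {f.source}")
        elif f.records > multiplier * usual:
            alerts.append(f"volume spike: {f.source} sent {f.records} records (usual ~{usual})")
    return alerts


flows = [
    Flow("crm", "analytics.internal", 450),
    Flow("crm", "paste.example.net", 200),
    Flow("crm", "billing.internal", 9000),
]
for alert in review_flows(flows, baseline={"crm": 500}):
    print(alert)
```

Either signal alone is easy to evade; together they catch both "data going somewhere new" and "far too much data going somewhere familiar."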

3.2 AI’s Hidden Memory: Training Data Leakage and Missing AI Guardrails

The strength of AI systems comes from learning from very large datasets, but that strength can also be a weakness. When models are trained on extensive, unfiltered sensitive data, they can "memorize" specific pieces of it. This phenomenon is known as training data leakage (or memorization), and it means that future outputs may inadvertently reveal private information.

Without sufficient AI guardrails, even data that was thought to be anonymized can resurface. Some models have been shown to reproduce names, contact details, or exact phrases from their training sets, so what looks like an intelligent reply may actually be a fragment of a private dataset.
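One practical way to test for this kind of memorization is the "canary" approach from privacy research: plant a random, unique secret in the training data, then check whether the trained model reproduces it when prompted with the surrounding context. In the sketch below, `generate` stands for any hypothetical function that returns a model's completion for a prompt; two stub models stand in for a real one.

```python
import secrets


def make_canary(prefix: str = "my account number is") -> tuple[str, str]:
    """Create a random 9-digit secret that should never occur naturally in the data."""
    return prefix, f"{secrets.randbelow(10**9):09d}"


def canary_exposed(generate, prefix: str, secret: str, attempts: int = 20) -> bool:
    """Return True if the model ever completes `prefix` with the planted secret.

    `generate` is any callable mapping a prompt string to generated text;
    several attempts matter because real models usually sample randomly.
    """
    return any(secret in generate(prefix) for _ in range(attempts))


# Plant the canary in the (toy) training data before training.
prefix, secret = make_canary()
training_text = ["the weather was mild today", f"{prefix} {secret}"]


def memorizing_model(prompt: str) -> str:
    """Stub for a model that has memorized its training lines verbatim."""
    for line in training_text:
        if line.startswith(prompt):
            return line[len(prompt):]
    return ""


def generic_model(prompt: str) -> str:
    """Stub for a model that generalizes instead of memorizing."""
    return " something I cannot share."


print(canary_exposed(memorizing_model, prefix, secret))  # True
print(canary_exposed(generic_model, prefix, secret))     # False
```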

3.3 The Internal Blind Spot: Why Security Guardrails Fail from Within

Although most organizations worry about outside threats, many of the most damaging breaches originate inside the organization. Employees with excessive privileges, misconfigured permissions, and legacy systems that predate current security guardrails all make it easy to bypass those guardrails.

Take a data scientist who tests a model on real customer data, or a developer who stores production keys in a publicly accessible location. Neither failure stems from malicious intent; both are simple negligence. Yet they expose how fragile internal governance becomes when AI guardrails exist only as written policies rather than as enforced processes.

The answer is automation and accountability. Effective security guardrails in modern systems should be self-enforcing: able to detect anomalies and risks and to revoke access automatically, regardless of human error.
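Committed credentials are a classic example of a guardrail that can be automated, for instance as a pre-commit check. The toy scanner below uses three illustrative patterns: the `AKIA` prefix is the documented format of long-term AWS access key IDs, and the sample key is AWS's published example key. Dedicated secret-scanning tools ship far larger, tuned rule sets.

```python
import re

# Illustrative rules only; dedicated scanners ship much larger, tuned rule sets.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_credential": re.compile(
        r"(?i)\b(?:api[_-]?key|secret|password)\s*[:=]\s*['\"][^'\"]{8,}['\"]"
    ),
}


def scan_for_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every line that looks like it holds a secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


sample = '''import os
AWS_KEY = "AKIAIOSFODNN7EXAMPLE"
password = "hunter2hunter2"
token = os.environ["API_TOKEN"]
'''
print(scan_for_secrets(sample))
# [(2, 'aws_access_key_id'), (3, 'hardcoded_credential')]
```

Note that line 4, which reads the token from the environment, is not flagged: the guardrail pushes developers toward the safe pattern rather than merely blocking the unsafe one.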

3.4 Trust Erosion: The Unseen Aftermath of Weak Privacy Guardrails

When breaches happen too often, it is not only the data that proves fragile; it is trust. Users start to question whether the organization can really protect their privacy, regulators tighten compliance laws, and investors lose confidence in leadership.

Weak privacy guardrails create immediate exposure, but they also cause lasting reputational damage that can take years to repair. In an era of AI-driven interaction, where personal data powers personalization and prediction, trust becomes the key currency.

4. When Trust Breaks: Real-World Privacy Disasters

History records more than innovation; it also records the lessons of innovation's failures. Every major technological advance has carried a lesson about accountability. For AI and data-driven systems, those lessons have mostly arrived as massive privacy breaches, billion-dollar fines, and lost user trust.

Despite growing awareness of privacy guardrails and security guardrails, many organizations still treat data protection as an afterthought. The result is a recurring series of privacy disasters that do more than breach security: they erode public confidence in the technology as a whole. The case studies below follow a familiar pattern, in which powerful systems built without guardrails eventually fall victim to their own built-in risks.

4.1 ChatGPT Data Leak (2023): A Lesson in Missing AI Guardrails

In March 2023, a bug in an open-source library used by ChatGPT briefly caused some users to see the titles of other users' conversations, and exposed partial billing information for a small percentage of ChatGPT Plus subscribers. OpenAI took the service offline temporarily and fixed the problem quickly, but the incident is a potent reminder that even sophisticated AI is not immune to data exposure. Several critical takeaways follow:

⟶ Every AI model, no matter how sophisticated, needs appropriate AI guardrails to monitor data retention and exposure.

⟶ Transparency and prompt remediation are essential to keep users' trust in an application.

⟶ Data exposure must always be governed by the principle of least privilege, so that users see only the data they are entitled to access.

The incident should serve as a wake-up call to AI developers: capability and privacy must advance in parallel.
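The least-privilege takeaway can be enforced at the point where data is returned, not only where it is stored. Below is a generic, hypothetical sketch of that defense-in-depth pattern (it is not a description of OpenAI's actual fix): even if a shared cache hands back the wrong record, an ownership check refuses to release it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Conversation:
    owner_id: str
    title: str


class OwnershipError(PermissionError):
    """Raised when a record would be returned to someone other than its owner."""


def fetch_conversation(cache: dict, key: str, requester_id: str) -> Optional[Conversation]:
    """Read from a shared cache, but re-check ownership before returning anything.

    Even if a bug maps `key` to another user's record, this check refuses to
    hand it over: least privilege enforced at read time, not just at write time.
    """
    item = cache.get(key)
    if item is None:
        return None
    if item.owner_id != requester_id:
        # In production, also log and alert: a mismatch here signals a bug.
        raise OwnershipError("record does not belong to the requester")
    return item


cache = {"conv-1": Conversation(owner_id="alice", title="Tax questions")}
print(fetch_conversation(cache, "conv-1", "alice").title)  # Tax questions
try:
    fetch_conversation(cache, "conv-1", "bob")
except OwnershipError as err:
    print("blocked:", err)  # blocked: record does not belong to the requester
```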

4.2 LoanDepot & Evolve Bank Breaches: When Security Guardrails Fail

The year 2024 was not kind to America's financial services sector, which suffered some of its most significant breaches in recent memory. Major mortgage lender LoanDepot was breached, exposing the personal and financial data of 16.9 million customers, including names, birth dates, account numbers, addresses, and phone numbers.

Evolve Bank & Trust suffered a similar breach that exposed data belonging to 7.6 million individuals, including sensitive identifiers such as Social Security numbers, account numbers, and contact information.

The impact reached far beyond these two institutions. Because Evolve provides banking services to multiple fintechs, including Affirm, Wise, and Bilt Rewards, the exposure rippled across many consumer-facing apps.

What made these breaches possible was not some novel hacking technique but, reportedly, missing security guardrails around data governance, access control, and the boundaries between organizations. Even where systems appear to be separated, a missing guardrail in one link of the chain can destabilize the whole chain.

Ultimately, the LoanDepot and Evolve incidents are a lesson about default assumptions: users tend to judge the apps they use by surface-level impressions and rarely get to evaluate the partner institutions that actually hold their data.

4.3 Meta's GDPR Fines: Privacy Guardrails Ignored at Scale

Meta's repeated clashes with the EU's General Data Protection Regulation (GDPR) show that even the world's largest technology platforms can fall out of compliance when privacy guardrails are not treated as a priority. In 2023, Meta was fined 1.2 billion euros for transferring EU users' data to the United States without adequate safeguards.

Following are the important takeaways from this case:

⟶ Size does not excuse non-compliance; the larger the platform, the stronger the AI guardrails it needs.

⟶ Cross-border data flows require guardrails that enforce regional privacy laws automatically, without waiting for regulators to intervene.

⟶ Regulators are shifting from a reactive approach, imposing fines after the fact, toward a proactive one built on regular audits.

The Meta case made clear that failing to build governance into privacy practices is no longer just a risk; it is a liability.
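Automated enforcement of regional rules can start as simply as writing a residency policy down as code and checking it before every transfer. In this sketch, the region codes, the `RESIDENCY_POLICY` table, and the `approved_transfer_mechanism` flag are hypothetical placeholders; the code only enforces whatever policy the organization has decided on, it does not determine what the law requires.

```python
# Hypothetical residency policy: regions where data about subjects in each region may be stored.
RESIDENCY_POLICY = {
    "EU": {"EU"},
    "US": {"US", "EU"},
}


def transfer_allowed(subject_region: str, destination_region: str,
                     safeguards: frozenset = frozenset()) -> bool:
    """Allow in-policy destinations; anything else needs a documented safeguard.

    Unknown subject regions get an empty allowlist, so they are blocked unless
    a safeguard is recorded. `approved_transfer_mechanism` is a placeholder for
    whatever legal basis the privacy team has documented for that transfer.
    """
    if destination_region in RESIDENCY_POLICY.get(subject_region, set()):
        return True
    return "approved_transfer_mechanism" in safeguards


print(transfer_allowed("EU", "EU"))  # True
print(transfer_allowed("EU", "US"))  # False
print(transfer_allowed("EU", "US", frozenset({"approved_transfer_mechanism"})))  # True
```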

4.4 Health Record Exposures: The Absence of Data Privacy Guardrails

The healthcare sector, where confidentiality can literally be a matter of life or death, has suffered some of the most alarming privacy breaches. Enormous amounts of health data have leaked from hospitals and health-tech start-ups because of insufficient privacy guardrails and weak AI data security practices.

Here are a few examples:

⟶ Medical images and records left freely accessible on unsecured cloud servers.

⟶ AI diagnostic systems trained on sensitive data without any anonymization.

⟶ Health apps selling patient data to advertisers under "research" clauses.

5. Guardrails Demystified: The Architecture of Digital Safety

Once we accept that data breaches erode trust and endanger whole ecosystems, the obvious next question is: how do we stop them? The answer is to build systems that are safe by design, not merely compliant with regulation.

Privacy guardrails and security guardrails form the invisible structure that determines how data is collected, processed, and shared. Think of them as the ethical and operational code that keeps AI from crossing the line between intelligence and intrusion. Built properly, AI guardrails are not roadblocks; they are the architectural backbone of digital security.

5.1 What Are Privacy and Security Guardrails?

Essentially, privacy guardrails and security guardrails refer to the ethical and technical demarcation points in digital and AI infrastructures.

They act as multi-layered safeguards built to:

⟶ Restrict excessive data collection and storage.

⟶ Prevent unauthorized access or sharing.

⟶ Uphold transparency about AI models' use of personal data.

⟶ Detect and mitigate threats in real time.

Often, the key difference between a secure AI system and a vulnerable one is whether those guardrails are built into its DNA or bolted on afterward.

Bolted-on guardrails are reactive: they respond to problems rather than preventing them. When guardrails are in place from the beginning, compliance becomes part of the culture rather than an afterthought, and safe data handling becomes a starting point of the design, not the last item on a checklist.

5.2 The Five-Layer Guardrail Framework for AI Data Security

Building a robust, misuse-resistant AI system requires a layered approach, with each layer protecting the next like nested pieces of armor. This five-layer design is the foundation for effective privacy guardrails and security guardrails in modern businesses.

Layer 1: Data Minimization and Anonymization

Gather only what is necessary. Every additional piece of data collected adds risk. The best guardrails are minimalist: identifying details are anonymized, and surrounding data is deleted from the record once it is no longer useful.
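A common way to apply this layer is to drop every field a task does not need and replace direct identifiers with keyed tokens. The sketch below uses an HMAC from Python's standard library; the field names are hypothetical. Keyed tokens are pseudonymization rather than true anonymization: whoever holds the key can recompute tokens for known identifiers and re-link records, so the key itself must be protected and rotated.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 token.

    The same value and key always give the same token, so records can still
    be joined, but the token cannot be recomputed without the secret key.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, key: bytes,
                    keep=("purchase_total", "region"),
                    tokenize=("customer_email",)) -> dict:
    """Tokenize join keys, keep only the listed fields, drop everything else."""
    out = {f: pseudonymize(record[f], key) for f in tokenize if f in record}
    out.update({f: record[f] for f in keep if f in record})
    return out


key = b"load-me-from-a-secrets-manager!!"  # placeholder; never hard-code real keys
raw = {"name": "Ana Silva", "customer_email": "ana@example.com",
       "home_address": "12 Elm St", "purchase_total": 42.5, "region": "EU"}
print(minimize_record(raw, key))  # name and home_address are gone; email is a 64-char token
```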

Layer 2: Differential Privacy

Differential privacy is a statistical technique that adds carefully calibrated "noise" to data or query results, so that an AI can learn general trends without revealing information about any specific individual. It is one of the most important AI guardrails because it balances learning from data with keeping individual records private.
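The classic building block is the Laplace mechanism. For a counting query, adding or removing one person changes the result by at most 1, so adding Laplace noise with scale 1/ε yields ε-differential privacy; a smaller ε means more noise and stronger privacy. This is a teaching sketch: production systems should rely on vetted libraries such as OpenDP, partly because naive floating-point noise sampling has known weaknesses.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Epsilon-differentially private count of items satisfying `predicate`.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so Laplace noise with scale 1/epsilon suffices.
    """
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


ages = [23, 67, 45, 71, 34, 80, 66, 52]
print(private_count(ages, lambda a: a > 65, epsilon=0.5))  # true count is 4; output varies
```

Each released answer spends privacy budget, so repeated queries on the same data need their ε values accounted for together.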

Layer 3: Encryption and Access Control

This is where cybersecurity guardrails meet AI infrastructure. Encryption ensures that data is unintelligible to anyone not authorized to use it, while access control determines, based on the type and sensitivity of the data, who has permission to view the most sensitive information (for example, consumer records).
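The access-control half of this layer can be expressed as sensitivity labels plus role clearances, with deny-by-default. The roles, labels, and clearances below are hypothetical; encryption at rest and in transit would be handled separately by a vetted cryptography library and is not shown.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3  # e.g. consumer financial or health records


# Hypothetical role clearances.
ROLE_CLEARANCE = {
    "analyst": Sensitivity.INTERNAL,
    "support_agent": Sensitivity.CONFIDENTIAL,
    "privacy_officer": Sensitivity.RESTRICTED,
}


def can_read(role: str, label: Sensitivity) -> bool:
    """Deny by default: unknown roles get nothing; known roles may read
    data labeled at or below their clearance."""
    clearance = ROLE_CLEARANCE.get(role)
    return clearance is not None and label <= clearance


print(can_read("support_agent", Sensitivity.CONFIDENTIAL))  # True
print(can_read("analyst", Sensitivity.RESTRICTED))          # False
print(can_read("contractor", Sensitivity.PUBLIC))           # False
```

The explicit `is not None` check matters: a clearance of `PUBLIC` has the integer value 0, which would otherwise be treated as "no role."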

Layer 4: Model-Level Safety Nets

These guardrails are built directly into AI models and the systems that serve them, limiting what is retained in memory and reducing the risk of data leaking out. They encode rules of AI ethics and safety, so that the system follows clear policies on what it is permitted to remember and what it must forget.
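For assistants that keep memory across sessions, "what it is permitted to remember and what it must forget" can be made explicit in code. The sketch below assumes an upstream classifier has already tagged each item with a category; the `NEVER_REMEMBER` categories and the `GuardedMemory` class are hypothetical.

```python
from collections import defaultdict

# Hypothetical policy: categories an assistant must never persist.
NEVER_REMEMBER = {"health", "financial", "government_id", "biometric"}


class GuardedMemory:
    """Per-user assistant memory with a deny-list and an explicit forget operation."""

    def __init__(self):
        self._items = defaultdict(list)

    def remember(self, user_id: str, text: str, category: str) -> bool:
        """Store `text` unless its category (assumed to come from an upstream
        classifier) is on the deny-list. Returns True only if stored."""
        if category in NEVER_REMEMBER:
            return False
        self._items[user_id].append(text)
        return True

    def recall(self, user_id: str) -> list:
        return list(self._items.get(user_id, []))

    def forget(self, user_id: str) -> None:
        """Erase everything stored for a user, e.g. on a deletion request."""
        self._items.pop(user_id, None)


memory = GuardedMemory()
memory.remember("u1", "prefers vegetarian recipes", "preference")
memory.remember("u1", "was diagnosed with asthma", "health")  # refused
print(memory.recall("u1"))  # ['prefers vegetarian recipes']
memory.forget("u1")
print(memory.recall("u1"))  # []
```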

Layer 5: Continuous Monitoring and Governance

Guardrails are never static. Monitoring tools must continuously watch for abnormal conditions, audit data flows, and verify compliance. Because threats evolve quickly, these governance systems, which are often themselves run by AI, must evolve just as fast, creating a living, continuous feedback loop for AI data security.

The advantage of this five-layer structure is that if one layer has a gap, the next can catch what slips through, forming a fail-safe chain of protection.

5.3 How AI Guardrails Strengthen Modern Data Architecture

Guardrails are not an add-on to AI; they are the foundation on which it stands. Properly implemented, they enhance performance and strengthen the system's resilience at every stage of its lifecycle.

The following is how effective AI guardrails change the digital architecture:

⟶ Data Lifecycle Control: Data is managed from ingestion to deletion, so sensitive data (for example, full credit-card numbers kept "for security reasons") is not retained unnecessarily or left in unknown locations.

⟶ Automated Compliance: Rules from regulations and frameworks such as HIPAA or GAPP are encoded as policies that a rules engine enforces automatically, making compliance continuous and self-enforcing rather than a periodic manual exercise.

⟶ Cross-Functional Visibility: Privacy and security teams work from shared AI data security dashboards, reducing the risks created by organizational silos.

⟶ Rebuilding User Trust: Trust is the most important currency of an AI-driven world, and auditable strategies that can be published transparently help earn it.
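Lifecycle control and automated compliance often take the form of "policy as code": rules written as small functions and run against every dataset on a schedule. The two rules below are hypothetical internal policies, loosely inspired by common regulatory expectations rather than a restatement of HIPAA or any other law, and the dataset fields are assumptions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Dataset:
    name: str
    contains_phi: bool          # protected health information
    encrypted_at_rest: bool
    created: date
    retention_days: int


# Hypothetical internal rules; each returns a violation message or None.
def rule_phi_encrypted(ds: Dataset, today: date) -> Optional[str]:
    if ds.contains_phi and not ds.encrypted_at_rest:
        return "PHI stored without encryption at rest"
    return None


def rule_retention(ds: Dataset, today: date) -> Optional[str]:
    if (today - ds.created).days > ds.retention_days:
        return "retention period exceeded; schedule deletion"
    return None


RULES = [rule_phi_encrypted, rule_retention]


def evaluate(datasets, today):
    """Run every rule against every dataset; return {name: [violations]}."""
    report = {}
    for ds in datasets:
        violations = [msg for rule in RULES if (msg := rule(ds, today))]
        if violations:
            report[ds.name] = violations
    return report


inventory = [
    Dataset("claims_2024", True, True, date(2024, 1, 1), 365),
    Dataset("legacy_export", True, False, date(2020, 1, 1), 730),
    Dataset("web_metrics", False, False, date(2024, 6, 1), 90),
]
print(evaluate(inventory, today=date(2024, 7, 1)))
```

Only `legacy_export` is reported, with both violations; adding a new rule means adding one function to `RULES`, which keeps the policy reviewable like any other code.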

The great strength of this model is its flexibility. Whether in healthcare, financial applications, or social platforms, guardrails adapt to how data behaves in each domain. They are built not to block innovation but to enable it.

When systems operate within ethical, well-defined boundaries, AI ethics and safety stop being a checklist and become the basis of intelligent innovation in any domain or niche.

6. The Risk Spectrum: From PII to Biometric Data

Not all data carries the same level of risk. Some types, such as aggregate statistics or anonymous trends, cause little harm if disclosed. Others, such as medical records or biometric identifiers, can cause serious and lasting harm if exposed.

As AI systems push toward deeper personalization, they reach into these high-risk categories, increasing the need for privacy guardrails, security guardrails, and AI guardrails matched to the sensitivity of the data they protect.

6.1 Personally Identifiable Information (PII) and Privacy Guardrails

Personally Identifiable Information (PII), such as names, addresses, phone numbers, and government IDs, forms the core of an individual's identity in today's digital ecosystem. Once compromised, PII can be used to impersonate its owner, conduct fraudulent transactions, or launch phishing attacks.

The problem is not only that PII is valuable but that it is everywhere. Social media accounts and online purchases leave traces of it all over the web. Without appropriate privacy guardrails, these traces become fragments that can easily be assembled into a larger picture.

To protect PII adequately, we must require the following of our AI systems:

⟶ Data minimization: collect only the data necessary to perform a task.

⟶ Automated destruction of data once it has served its intended purpose.

⟶ Encryption and access controls which minimize exposure, even in trusted environments.

Protecting PII is not merely a technical task but a moral obligation. In an AI-driven world, privacy must be among the first and most fundamental rights we preserve.

6.2 Financial Data Leakage and Security Guardrails in Action

Few things tempt criminals more than financial information. Account numbers, credit card numbers, and transaction histories are a digital goldmine. A single leak can snowball into large-scale fraud and lasting institutional damage, as the 2017 Equifax breach showed.

What is the worst threat? I believe it is human error operating in a legacy environment. Financial institutions still rely on manual supervision of unwieldy processes and lack advanced security guardrails that can detect abnormal activity and transactions in real time.

True AI data security in finance consists of:

⟶ Predictive AI models that recognize and isolate abnormal spending and other deviations from the norm.

⟶ Dynamic encryption policies that evolve as attack techniques mature.

⟶ Strong internal auditing that guards against misuse by insiders.
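A simple, robust starting point for flagging abnormal spending is the modified z-score, which measures distance from a customer's median spend in units of the median absolute deviation (MAD) and is less distorted by past outliers than a mean-based score. The 3.5 cutoff is a commonly cited rule of thumb and the amounts are made up; production fraud models use far richer features than a single amount.

```python
from statistics import median


def flag_unusual_spending(history, new_amounts, threshold=3.5):
    """Return the new amounts whose modified z-score exceeds `threshold`.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation of the customer's past amounts.
    """
    med = median(history)
    mad = median(abs(x - med) for x in history)
    if mad == 0:  # every past amount identical: flag anything different
        return [x for x in new_amounts if x != med]
    return [x for x in new_amounts if abs(0.6745 * (x - med) / mad) > threshold]


history = [42, 38, 55, 47, 40, 51, 45, 39, 60, 44]  # made-up past purchases
print(flag_unusual_spending(history, [48, 70, 950]))  # [950]
```

Here the median is 44.5 and the MAD is 5, so 70 scores about 3.44 and stays just under the cutoff, while 950 scores above 120.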

Finance is not merely about transactions; it is about the trust people place in them. Without an adaptive security architecture to protect that trust, it evaporates faster than any currency.

6.3 Health Data & HIPAA Violations: Guardrails for Medical AI

Healthcare data exists in a place where privacy and humanity meet. Its information includes medical histories, genetic makeup, prescriptions, and mental health records — all of which are highly sensitive subjects protected by laws like HIPAA.

However, the rapid emergence of medical AI tools is blurring the line between invention and intrusion. Training diagnostic models on real patient data without sufficient AI guardrails can lead to accidental disclosure or misuse of patient information and to biased results in analyses.

Strong privacy guardrails and security guardrails built into these health-care AI systems provide:

⟶ De-identification protocols: Data is cleaned of personal markers before model training.

⟶ Consent-driven sharing: Patients know, and agree to, how their data is used.

⟶ Real-time monitoring: Alerts fire when unauthorized access or abnormal data extraction occurs.
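As an illustration of the de-identification protocols above, the sketch below applies a few transformations in the spirit of the HIPAA Safe Harbor method: removing direct identifiers, keeping only the year of dates, grouping ages over 89 into "90+", and truncating ZIP codes to three digits. It is deliberately partial (Safe Harbor lists 18 identifier types, and three-digit ZIP prefixes covering 20,000 or fewer people must become "000"), and the field names are hypothetical.

```python
from datetime import date

# Subset of direct identifiers for this toy schema (Safe Harbor lists 18 types).
DIRECT_IDENTIFIERS = {"name", "email", "phone", "ssn", "medical_record_number", "street_address"}


def deidentify(record: dict) -> dict:
    """Partial, Safe Harbor-inspired de-identification; not a complete implementation."""
    out = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            continue                                     # drop direct identifiers
        if isinstance(value, date):
            out[field] = value.year                      # keep only the year of dates
        elif field == "age":
            out[field] = "90+" if value > 89 else value  # aggregate ages over 89
        elif field == "zip":
            # Keep the first three digits; a full implementation must also map
            # prefixes covering 20,000 or fewer people to "000".
            out[field] = value[:3]
        else:
            out[field] = value
    return out


patient = {"name": "Ana Silva", "ssn": "123-45-6789", "zip": "02139", "age": 93,
           "admitted": date(2023, 4, 12), "diagnosis": "asthma"}
print(deidentify(patient))
# {'zip': '021', 'age': '90+', 'admitted': 2023, 'diagnosis': 'asthma'}
```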

6.4 Training Data Memorization and the Importance of AI Guardrails

AI's capacity to learn from massive datasets is its greatest strength, but also one of its greatest weaknesses. When large language models and other neural networks are trained, specific data entries, such as user queries or internal documents, can be memorized and later reproduced verbatim.

This often-undetected weakness has made training data memorization one of the most troublesome issues in modern AI data security.

Good AI guardrails are designed to counteract this by:

⟶ Pre-filtering sensitive data before the model ingests it.

⟶ Using differential privacy techniques to obscure individual identities.

⟶ Implementing output moderation layers to prevent inadvertent recall of data.
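The first of these checks can be sketched as a redaction pass that runs before any text reaches the training set. The regular expressions below are illustrative and intentionally narrow (simple email addresses, US-style SSNs and phone numbers); real pipelines combine broader patterns with ML-based PII detection.

```python
import re

# Illustrative, intentionally narrow detectors (simple emails, US-style SSNs and phones).
PII_PATTERNS = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("SSN", re.compile(r"\b\d{3}-\d{2}-\d{4}\b")),
    ("PHONE", re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")),
]


def redact(text: str) -> tuple[str, dict]:
    """Replace detected PII with typed placeholders; return (text, counts)."""
    counts = {}
    for label, pattern in PII_PATTERNS:
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


def prefilter(corpus: list[str]) -> tuple[list[str], dict]:
    """Redact every document before it is added to the training set."""
    cleaned, totals = [], {}
    for doc in corpus:
        doc, counts = redact(doc)
        cleaned.append(doc)
        for label, n in counts.items():
            totals[label] = totals.get(label, 0) + n
    return cleaned, totals


corpus = ["Contact ana@example.com or 617-555-0123.",
          "SSN on file: 123-45-6789",
          "No personal data here."]
cleaned, totals = prefilter(corpus)
print(cleaned)
print(totals)  # {'EMAIL': 1, 'PHONE': 1, 'SSN': 1}
```

Typed placeholders keep sentence structure intact for training, and the counts give auditors a record of how much sensitive data the filter caught.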

Without these checks, AI systems risk breaching confidentiality by design. An unguarded AI can become its own breach vector: intelligent, but indiscreet.

6.5 Biometric Data Protection Through Security Guardrails

Biometric data, whether fingerprints, facial geometry, voiceprints, or gait patterns, is among the most personal data ever recorded. Unlike a password, it cannot be reset or replaced. Once compromised, an individual's identity may be permanently at risk.

As biometric technologies spread through airports, offices, and consumer devices, the central problem will be balancing convenience with control. Security and cybersecurity guardrails must now operate with exceptional precision.

The guiding principles would include:

⟶ Template safeguarding: store encrypted biometric templates, never raw biometric data.

⟶ Decentralization: process biometric data locally on the device (as Apple's Face ID does).

⟶ Zero-trust architecture: never assume a request is safe; always verify it.
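The first two principles can be illustrated with a simplified take on "cancelable biometrics": a feature vector is transformed with a user-specific random projection, only the transformed template is kept on the device, and a leaked template can be revoked by enrolling again with a new seed. This is a toy sketch under stated assumptions (feature vectors already extracted, an arbitrary 0.8 similarity threshold, encryption of the stored template not shown); plain random projections are not, by themselves, considered strong template protection.

```python
import math
import random


def make_template(features: list[float], seed: int, out_dim: int = 64) -> list[float]:
    """Project raw features with a user-specific random Gaussian matrix.

    Only this template is stored; if it leaks, enrolling again with a new
    seed produces an unrelated template, effectively revoking the old one.
    """
    rng = random.Random(seed)
    matrix = [[rng.gauss(0.0, 1.0) for _ in features] for _ in range(out_dim)]
    return [sum(w * x for w, x in zip(row, features)) for row in matrix]


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def matches(template: list[float], probe: list[float], seed: int, threshold: float = 0.8) -> bool:
    """Transform a fresh sample with the same seed and compare locally."""
    return cosine(template, make_template(probe, seed, len(template))) >= threshold


rng = random.Random(42)
enrolled = [rng.gauss(0, 1) for _ in range(128)]         # stand-in for extracted features
same_person = [x + rng.gauss(0, 0.1) for x in enrolled]  # a noisy re-scan
template = make_template(enrolled, seed=7)               # raw `enrolled` can now be discarded
print(matches(template, same_person, seed=7))
```

Random projections approximately preserve angles between vectors, which is why a noisy re-scan still matches while a sample projected with a different seed does not.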

Safeguarding biometric data is another domain where AI ethics and safety intersect directly with human rights, and the line between innovation and violation becomes very thin.

As shown above, each type of data poses different challenges, and there is no one-size-fits-all solution. AI guardrails therefore have to be dynamic, risk-aware, and carefully integrated into each use case. The more valuable the data, the stronger the protective layers must be.

In the next section, we will see these principles in action, examining how leaders such as Google, OpenAI, and Microsoft build privacy guardrails and security guardrails into their ecosystems to restore trust at scale.

7. Guardrails in Action: How Industry Leaders Are Doing It Right

While policies define intent, it is implementation that defines integrity. The most successful organizations on the planet have learned that privacy cannot be dealt with through compliance documents alone; it needs to be built into the very foundation of technology.

From Silicon Valley giants to healthcare startups, organizations are embedding privacy guardrails, security guardrails, and AI guardrails so that user trust, data protection, and ethical innovation can coexist. These companies have shown that responsible AI is not a roadblock to progress; it is the blueprint for sustainable growth.

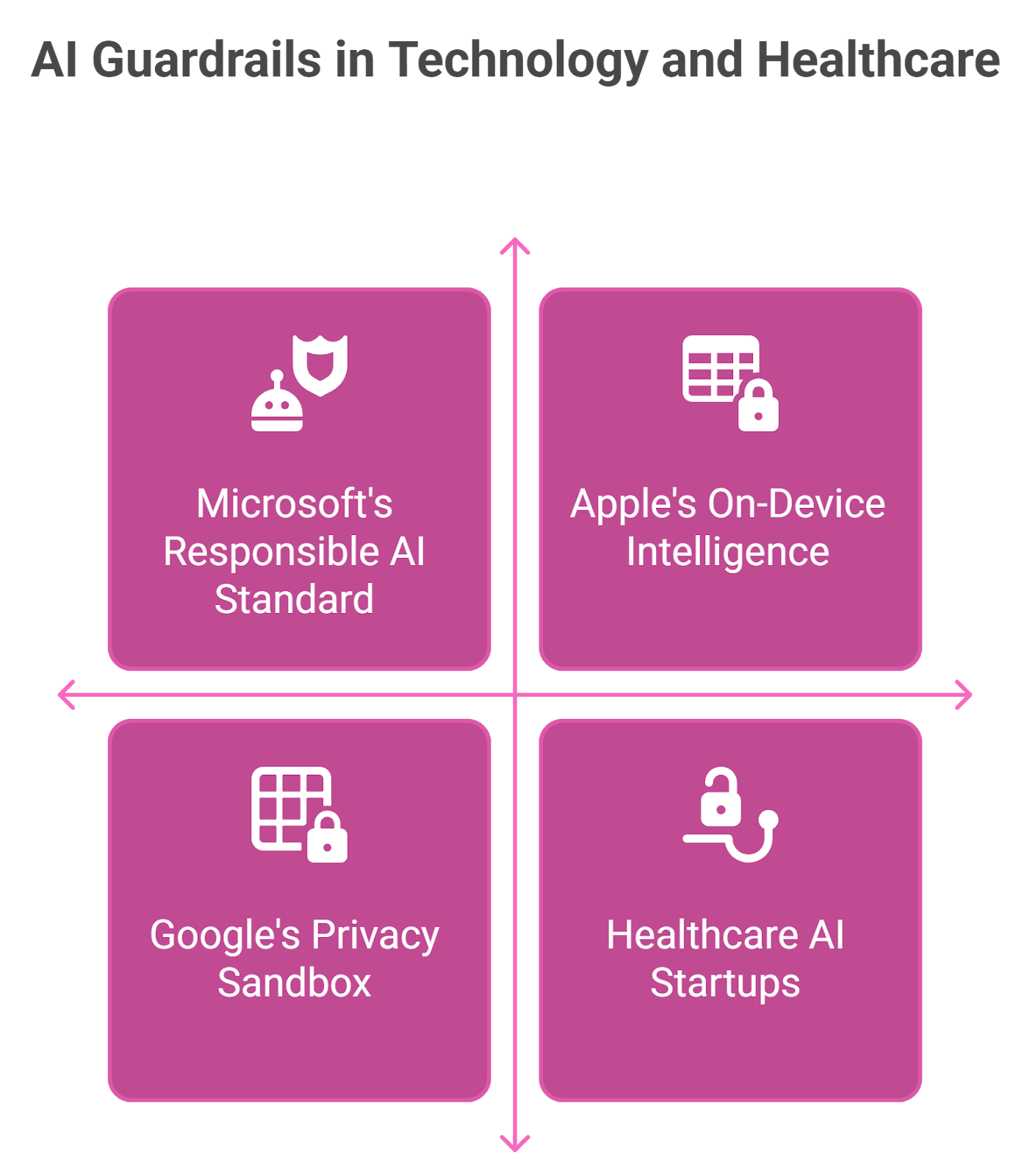

7.1 Google's Privacy Sandbox: A Blueprint for Privacy Guardrails

In 2019, Google announced its Privacy Sandbox initiative, and in 2020 it laid out plans to phase out third-party cookies in Chrome (plans it later scaled back), with the aim of reshaping online advertising without compromising users' privacy.

This represents a shift from reactive privacy compliance to proactive privacy guardrails built into the web ecosystem itself.

Instead of sending raw user data to advertisers, the Privacy Sandbox lets the browser analyze information locally and share only aggregated or limited signals with advertisers.

Why this is important:

⟶ Provides users with protection against intrusive tracking.

⟶ Promotes transparency through open-source frameworks.

⟶ Shows that personalization and privacy can exist together under solid AI guardrails.
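The principle of sharing aggregates instead of raw data can be sketched as threshold aggregation: count distinct users per category and suppress any category too small to hide an individual. This illustrates the idea only; it is not the Privacy Sandbox API, and the threshold of 50 is an arbitrary assumption.

```python
from collections import defaultdict


def thresholded_counts(events, k=50):
    """Count distinct users per category; suppress categories with fewer than k users.

    Counting distinct users (not events) stops one heavy user from inflating a
    count, and suppression keeps small groups from being singled out.
    """
    users_by_category = defaultdict(set)
    for user_id, category in events:
        users_by_category[category].add(user_id)
    return {cat: len(users) for cat, users in users_by_category.items() if len(users) >= k}


events = [(f"u{i}", "cycling") for i in range(60)]
events += [("u1", "cycling")]                          # repeat visit, counted once
events += [(f"v{i}", "rare_hobby") for i in range(3)]  # too small to release
print(thresholded_counts(events))  # {'cycling': 60}
```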

Google's approach set a new starting point for ethics in advertising, suggesting that privacy-first design can drive an entire global ad economy.

7.2 OpenAI's Redaction and Reinforcement Learning from Human Feedback (RLHF)

OpenAI, one of the most prominent AI companies in the world, incorporates several layers of AI guardrails, notably redaction and RLHF (Reinforcement Learning from Human Feedback).

Redaction aims to filter sensitive and personally identifiable data out of the training pipeline and out of model outputs. RLHF uses human reviewers' ratings of model responses to train the model toward answers that stay within safety, fairness, and ethical standards, so that what end users eventually receive is better aligned with those standards.

Key elements include:

⟶ Redaction layers to prevent recall and reproduction of sensitive data.

⟶ Ongoing human oversight to reinforce AI ethics and safety.

⟶ Transparent model updates to support accountability and continuous improvement.
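An output-side redaction layer can be as simple as pattern matching before a response is returned. The minimal sketch below assumes three hypothetical regex patterns (email address, US Social Security number, US phone number). Production systems generally combine rules like these with trained entity recognizers, and these patterns will both miss and over-match real-world PII.

```python
import re

# Hypothetical patterns; order matters only if patterns could overlap.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

def redact(text: str) -> str:
    """Replace each matched PII span with a placeholder like [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

print(redact("Contact jane.doe@example.com or 555-123-4567; SSN 123-45-6789."))
# Contact [EMAIL] or [PHONE]; SSN [SSN].
```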

7.3 Microsoft’s Responsible AI Standard: Scaling Security Guardrails Globally

Microsoft’s Responsible AI Standard is one of the most thorough frameworks for enterprise-grade AI governance.

It sets out concrete principles for fairness, inclusiveness, transparency, and reliability, all enforced by a rigorous network of security guardrails.

The standard relies on multilayered internal reviews intended to ensure that AI systems meet ethical and technical requirements before deployment.

Highlights include:

1. Required data risk assessments prior to training AI systems.

2. AI “Impact Assessments” that scope ethical implications at each step.

3. Centralized monitoring through secure governance dashboards.
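The pre-deployment checks listed above can be encoded as an automated release gate. This sketch is hypothetical: the check names in `REQUIRED_CHECKS`, the `ReleaseCandidate` fields, and the rule that any open high-severity risk blocks release are illustrative assumptions, not part of Microsoft's published standard.

```python
from dataclasses import dataclass, field

# Hypothetical names for the reviews a model must pass before release.
REQUIRED_CHECKS = ("data_risk_assessment", "impact_assessment", "monitoring_registered")

@dataclass
class ReleaseCandidate:
    name: str
    completed_checks: set[str] = field(default_factory=set)
    open_high_severity_risks: int = 0

def deployment_blockers(candidate: ReleaseCandidate) -> list[str]:
    """List every reason the candidate cannot ship yet (empty means clear)."""
    blockers = [f"missing {check}" for check in REQUIRED_CHECKS
                if check not in candidate.completed_checks]
    if candidate.open_high_severity_risks > 0:
        blockers.append(f"{candidate.open_high_severity_risks} unresolved high-severity risk(s)")
    return blockers

def can_deploy(candidate: ReleaseCandidate) -> bool:
    return not deployment_blockers(candidate)

candidate = ReleaseCandidate("triage-model", {"data_risk_assessment", "impact_assessment"})
print(deployment_blockers(candidate))  # ['missing monitoring_registered']
```

Returning the full list of blockers, rather than a bare yes/no, gives reviewers an audit trail of why a release was held back.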

Microsoft’s model demonstrates how security guardrails can be codified at scale, leaving room for innovation without sacrificing accountability.

7.4 Apple’s On-Device Intelligence: Embedded Privacy Guardrails at Work

Apple has long set itself apart through a strong pro-privacy focus, and its on-device intelligence is among the clearest examples of privacy guardrails in action.

Rather than sending data to the cloud for analysis, Apple processes sensitive information, such as facial recognition data, typing patterns, and voice input, directly on the device.

This decentralized model dramatically reduces exposure risk and minimizes dependence on outside servers.
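The on-device principle can be illustrated with a matcher that keeps an enrolled biometric template local and exposes only a yes/no decision. This is a hypothetical sketch, not how Face ID works internally: the embedding vectors, the cosine-similarity comparison, and the `threshold` of 0.9 are assumptions. Python itself does not enforce confinement; the privacy property comes from the design choice never to serialize or transmit the template.

```python
import math

class OnDeviceMatcher:
    """Hypothetical matcher: the enrolled template stays inside this object.

    Callers only ever receive a boolean; nothing here serializes or
    transmits the template.
    """

    def __init__(self, enrolled_template: list[float], threshold: float = 0.9):
        self._template = list(enrolled_template)
        self._threshold = threshold

    @staticmethod
    def _cosine(a: list[float], b: list[float]) -> float:
        dot = sum(x * y for x, y in zip(a, b))
        norm_a = math.sqrt(sum(x * x for x in a))
        norm_b = math.sqrt(sum(y * y for y in b))
        if norm_a == 0.0 or norm_b == 0.0:
            return 0.0
        return dot / (norm_a * norm_b)

    def matches(self, probe: list[float]) -> bool:
        """Compare a freshly captured on-device embedding to the enrolled one."""
        return self._cosine(self._template, probe) >= self._threshold

matcher = OnDeviceMatcher([0.2, 0.9, 0.4])
print(matcher.matches([0.21, 0.88, 0.41]))  # True
print(matcher.matches([0.9, -0.1, 0.2]))    # False
```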

Benefits include:

⟶ AI data security: sensitive data does not leave user devices.

⟶ Resilience through decentralization: a breach of any single server exposes far less data.

⟶ User empowerment: Privacy becomes a tangible feature, not a hidden policy.

7.5 Healthcare AI Startups: Privacy and Security Guardrails in Healthcare Innovation

In healthcare, AI guardrails have become the means of keeping innovation from turning into liability.

Startups building diagnostic algorithms, predictive models, and virtual health platforms face a dual challenge: proving clinical effectiveness while protecting patient confidentiality.

Leading healthtech companies are meeting this challenge by building privacy guardrails and security guardrails directly into their AI workflows.

Best-practice examples include:

- Federated learning, which trains models on distributed data without moving that data.

- HIPAA-compliant encryption for all stored and transmitted health information.

- Automated anonymization pipelines that de-identify datasets before AI model training.
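An automated de-identification step might look like the following minimal sketch. It drops direct identifiers, replaces the patient ID with a keyed HMAC-SHA256 pseudonym, and coarsens birth date and ZIP code. The field names and generalization rules are hypothetical and only loosely inspired by HIPAA's Safe Harbor method, which covers many more identifiers and conditions (for example, 3-digit ZIP prefixes are allowed only for sufficiently populous areas), so this is a starting point rather than a compliant pipeline.

```python
import hashlib
import hmac

# Hypothetical schema: fields removed outright before training.
DIRECT_IDENTIFIERS = {"name", "email", "phone", "street_address"}

def pseudonymize(patient_id: str, key: bytes) -> str:
    """Keyed hash: the same patient maps to the same token without exposing the ID."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

def deidentify(record: dict, key: bytes) -> dict:
    clean = {}
    for column, value in record.items():
        if column in DIRECT_IDENTIFIERS:
            continue                             # drop direct identifiers entirely
        if column == "patient_id":
            clean["pseudo_id"] = pseudonymize(value, key)
        elif column == "birth_date":
            clean["birth_year"] = value[:4]      # assumes ISO 'YYYY-MM-DD'
        elif column == "zip":
            clean["zip3"] = value[:3]            # keep only the first three digits
        else:
            clean[column] = value                # clinical fields pass through
    return clean

record = {"patient_id": "P-001", "name": "Jane Doe", "birth_date": "1980-04-12",
          "zip": "02139", "diagnosis": "J45"}
print(deidentify(record, key=b"keep-this-key-out-of-the-dataset"))
```

Keying the hash matters: an unkeyed hash of a short ID can be reversed by simply hashing every possible ID.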

8. The Future of Guardrails: Building AI That Deserves Our Trust

Speed, precision, and scale alone won’t measure AI’s future success; the metric that matters will be trust. At a time when machines help make life-and-death decisions affecting billions of people, the next revolution will come not from cleverer algorithms but from safer ones.

As AI becomes more autonomous, privacy guardrails and safety guardrails must evolve from static rules into dynamic systems that learn, adapt, and respond in real time to ethical, social, and regulatory change. The objective is not to stifle innovation but to ensure that it is responsible and benefits humanity.

This is what that future looks like.

8.1 Federated Learning & Synthetic Data: The Next Frontier of AI Guardrails

For years, AI has relied on centralized data collection, funneling huge quantities of data from disparate sources into a single repository for training. While this paradigm yields strong performance and accuracy, it also creates a serious risk of exposure: a single breach can compromise millions of records. The next iteration of AI architecture is federated learning, a decentralized training process in which models learn collaboratively across many devices or servers without sharing raw data. Only model updates are exchanged, so raw sensitive data stays where it was collected. This approach fits naturally with modern AI guardrails, preserving data sovereignty while retaining the benefits of continuous improvement.
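Federated averaging (FedAvg) is the classic way to realize this: each client trains on its own data, and a server averages the resulting model parameters, weighted by each client's sample count. The minimal sketch below fits a one-feature linear model y = w*x + b; the two in-memory client datasets stand in for separate devices or hospitals and are purely illustrative. Real deployments typically add secure aggregation or differential privacy, because model updates themselves can leak information.

```python
def local_update(w, b, data, lr=0.2, epochs=20):
    """Gradient descent on one client's private data for y ~ w*x + b (MSE loss)."""
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += err * x
            grad_b += err
        n = len(data)
        w -= lr * 2 * grad_w / n
        b -= lr * 2 * grad_b / n
    return w, b

def federated_averaging(clients, rounds=30):
    """Server loop: broadcast the global model, collect local models, average them."""
    w, b = 0.0, 0.0
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        # Only (w, b) pairs cross the network; the raw (x, y) data never does.
        updates = [(local_update(w, b, data), len(data)) for data in clients]
        w = sum(uw * n for (uw, _), n in updates) / total
        b = sum(ub * n for (_, ub), n in updates) / total
    return w, b

# Hypothetical clients whose private data both follow y = 3x + 1.
client_a = [(x / 10, 3 * (x / 10) + 1) for x in range(11)]
client_b = [(x / 20, 3 * (x / 20) + 1) for x in range(21)]
w, b = federated_averaging([client_a, client_b])
print(round(w, 3), round(b, 3))  # close to 3 and 1
```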

Federated learning will be complemented by synthetic data: artificially generated datasets that reproduce the patterns and variability of real-world data without containing real personal or proprietary records. Combined with strong AI data security and ethics and safety controls, synthetic data lets teams train, test, and refine AI models, and improve their fidelity, without breaking the seal of privacy. Together, federated learning and synthetic data will reshape the trade-offs among accuracy, confidentiality, and compliance, yielding AI that is both intelligent and fundamentally secure.
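As a deliberately naive illustration of synthetic data, the sketch below fits a mean and standard deviation to each numeric column of a real table, then samples fresh rows from independent normal distributions. The column names and records are made up. Sampling columns independently discards the correlations between them, and more faithful generators can memorize and leak real records, so production synthetic data needs its own privacy evaluation.

```python
import random
import statistics

def fit_marginals(rows, columns):
    """Estimate (mean, population standard deviation) for each numeric column."""
    return {
        col: (statistics.fmean(r[col] for r in rows),
              statistics.pstdev([r[col] for r in rows]))
        for col in columns
    }

def sample_synthetic(params, n, seed=0):
    """Draw n synthetic rows; each column is sampled independently from a normal."""
    rng = random.Random(seed)
    return [{col: rng.gauss(mu, sigma) for col, (mu, sigma) in params.items()}
            for _ in range(n)]

# Hypothetical "real" records that never leave the secure environment.
real = [
    {"age": 30, "systolic_bp": 118},
    {"age": 40, "systolic_bp": 125},
    {"age": 50, "systolic_bp": 131},
    {"age": 60, "systolic_bp": 140},
]
params = fit_marginals(real, ["age", "systolic_bp"])
synthetic = sample_synthetic(params, n=5000)
print(synthetic[0])
```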

8.2 Zero-Trust Systems and Encryption Evolution: Rethinking Security Guardrails

The traditional cybersecurity model is based on implicit trust: it assumes that internal systems, once vetted, are safe. As AI-driven ecosystems become more interconnected, that assumption becomes dangerous. Enter zero-trust architecture, the future of security guardrails.

In a zero-trust system:

⟶ Each user, device, and application must constantly prove its legitimacy.

⟶ No interaction is assumed to be trusted, even within internal networks.

⟶ Continuous AI-driven monitoring checks every access point in real time.

This framework turns security guardrails from static walls into intelligent filters that continuously assess behavior patterns, detect anomalies, and automatically deny suspicious access.
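The zero-trust rules above (prove identity on every request, trust nothing by default, check every access) can be sketched as a single authorization function. Everything here is hypothetical: the HMAC-signed request format, `DEMO_KEY`, the `device_compliant` posture flag, and the policy dictionary. It illustrates deny-by-default evaluation, not a production identity system, which would typically rely on standard token formats and managed, rotated keys.

```python
import hashlib
import hmac
import time
from typing import Optional

# Demo-only key (assumption); a real system would use a managed, rotated secret.
DEMO_KEY = b"demo-only-key"

def sign_request(user: str, device: str, expires_at: float, key: bytes = DEMO_KEY) -> str:
    """HMAC over the identity claims so any tampering is detectable."""
    message = f"{user}|{device}|{expires_at}".encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()

def authorize(request: dict, policy: dict, now: Optional[float] = None,
              key: bytes = DEMO_KEY) -> bool:
    """Evaluate every request from scratch; any failed check means deny."""
    now = time.time() if now is None else now
    expected = sign_request(request["user"], request["device"], request["expires_at"], key)
    if not hmac.compare_digest(expected, request.get("signature", "")):
        return False  # identity claims altered or unsigned
    if now >= request["expires_at"]:
        return False  # credential expired; must re-authenticate
    if not request.get("device_compliant", False):
        return False  # device posture check failed
    # Least privilege: only explicitly granted resources are reachable.
    return request["resource"] in policy.get(request["user"], set())

policy = {"alice": {"patient-db"}}
req = {"user": "alice", "device": "laptop-7", "expires_at": 2000.0,
       "device_compliant": True, "resource": "patient-db"}
req["signature"] = sign_request(req["user"], req["device"], req["expires_at"])
print(authorize(req, policy, now=1000.0))                         # True
print(authorize({**req, "user": "mallory"}, policy, now=1000.0))  # False
```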

Combined with advances in quantum-safe cryptography and homomorphic encryption, which allows computation directly on encrypted data, the future of AI data security may let systems process information without ever exposing the raw data.
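Fully homomorphic schemes are complex, but the core idea of computing on ciphertexts is visible in the Paillier cryptosystem, which is additively homomorphic: multiplying two ciphertexts yields an encryption of the sum of their plaintexts. The toy sketch below uses two small, well-known primes chosen for readability. It is insecure and is meant only to show that a server could add encrypted values it cannot read.

```python
import math
import secrets

# Toy parameters: two known primes (2**31 - 1 and 2**61 - 1).
# Far too small and too structured for real use; illustration only.
P = 2**31 - 1
Q = 2**61 - 1
N = P * Q
N_SQ = N * N
G = N + 1                      # standard simplified generator choice
LAM = math.lcm(P - 1, Q - 1)   # Carmichael function of N
MU = pow(LAM, -1, N)           # valid inverse because G = N + 1

def encrypt(m: int) -> int:
    """Encrypt 0 <= m < N with fresh randomness r coprime to N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ

def decrypt(c: int) -> int:
    """Recover m via L(x) = (x - 1) // N, then multiply by MU."""
    x = pow(c, LAM, N_SQ)
    return ((x - 1) // N * MU) % N

def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts (mod N)."""
    return (c1 * c2) % N_SQ

total = add_encrypted(encrypt(20), encrypt(22))
print(decrypt(total))  # 42
```

The party computing `add_encrypted` never sees 20 or 22, only ciphertexts; only the holder of `LAM` and `MU` can decrypt the total.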

8.3 Global Frameworks & AI Ethics: The Expansion of Privacy Guardrails

The discussion surrounding AI safety is no longer limited to tech companies; it has become a matter of global policy. Governments, international organizations, and ethics research institutions are collaborating to establish global standards for responsible AI development.

Frameworks such as these are leading the way:

- The NIST AI Risk Management Framework (US): defines four core functions (Govern, Map, Measure, and Manage) for building and operating trustworthy AI.

- The EU AI Act: introduces risk-based classification and accountability requirements for AI systems, writing guardrails directly into law.

- ISO/IEC 23894: an international standard providing guidance on AI risk management.
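The risk-based approach of the EU AI Act can be pictured as a lookup from use case to risk tier and obligations. This is a simplified illustration, not legal guidance: the use-case keys are hypothetical labels, the four tiers follow the Act's widely described structure (unacceptable, high, limited, minimal), and anything unrecognized is routed to human review rather than assumed to be low risk.

```python
# Simplified summary of obligations per tier; real classification depends on
# the Act's detailed provisions and the context of use.
TIER_OBLIGATIONS = {
    "unacceptable": "prohibited",
    "high": "risk management, data governance, human oversight, conformity assessment",
    "limited": "transparency: tell users they are interacting with AI",
    "minimal": "no specific obligations; voluntary codes of conduct",
}

# Hypothetical use-case labels mapped to commonly cited example tiers.
USE_CASE_TIERS = {
    "social_scoring": "unacceptable",
    "cv_screening_for_hiring": "high",
    "customer_service_chatbot": "limited",
    "email_spam_filter": "minimal",
}

def classify(use_case: str) -> tuple[str, str]:
    """Return (tier, obligations); unknown use cases are never defaulted to low risk."""
    tier = USE_CASE_TIERS.get(use_case)
    if tier is None:
        return ("unclassified", "route to legal and compliance review")
    return (tier, TIER_OBLIGATIONS[tier])

print(classify("cv_screening_for_hiring"))
```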

Such efforts mark a fundamental shift from self-regulation toward global alignment. Future systems will need to conform not only to the rules of a single region but to a universal baseline of ethics and safety designed to protect people everywhere.

As these frameworks mature, AI guardrails will serve not only as technical safeguards but also as diplomatic agreements between humanity and its machines.

8.4 Privacy as a Competitive Advantage in the Age of AI Guardrails

Once viewed as a regulatory hurdle or cost center, privacy is now a potent competitive asset. Sixty-two percent of next-generation consumers and enterprises now value trust above traditional market dominance, gravitating toward brands that protect their data as fiercely as they promote innovation. Companies that embed privacy guardrails and security guardrails into their design workflows from the start are reaping benefits well beyond compliance:

⟶ Deeper brand trust and loyalty, because users stay with platforms where they feel safe.

⟶ Faster adoption of new products, because transparent privacy policies reduce friction.

⟶ Lower operational risk, because stronger platform security reduces losses from breaches and reputational damage.

Sophisticated companies have concluded that ethical innovation sells. The most successful players in the new AI landscape, from cloud providers to health technology firms, are fusing AI ethics and safety directly into their business DNA. They show that integrity is not a hindrance to growth but a driver of it. In today’s digital economy, trust is not a by-product of good technology; it is part of the technology. The foundation of that trust is AI guardrails: the principles and protections that let innovation flourish while preserving what matters most, namely human confidence, security, and dignity.

9. Conclusion — Privacy Is the New Trust Currency

For too long, the promise of technology has been measured by progress alone: faster processes, smarter machines, and limitless connections. With each step forward, we confront a sobering truth: innovation without integrity is unsustainable. The digital age has reached the point where the question is no longer how much data AI can process, but how safely it can do so.

In this new age, privacy is not just a box to be ticked. It is the foundation on which the digital world rests, the currency through which people learn to trust, interact with, and accept technology. Just as monetary systems need vaults, AI needs privacy and security guardrails. These invisible boundaries protect our personal, financial, and biometric identities from misuse and ensure that progress never comes at the cost of self-respect.

Strong security guardrails now matter as much to innovation as the algorithms themselves. They do not impede AI; they keep it stable, safe, and efficient. In an ecosystem where a single data leak can destroy years of credibility, guardrails have become the true mark of maturity. Companies that treat privacy as optional will soon fall behind those that develop it as a competitive edge.

The rise of AI guardrails marks a major shift in how we define intelligence. True intelligence lies not in what machines can absorb, but in what they choose not to know. The next stage of AI is not about teaching machines to "think" but to respect. It is about building technologies that understand context, permission, and consequence.

Across the commercial world, from the most high-tech firms to the least, we see a shift from reactive protection to proactive responsibility. Privacy-first principles are no longer an add-on or marketing patter; they are the ethics built into the product. They govern how systems are trained, how data is used, and how trust is won.

The next chapter of the AI story belongs to the builders: the engineers, lawmakers, and government leaders who know that AI ethics and safety are not constraints on development but its essential catalyst. Every added layer of privacy and security guardrails strengthens resilience in a fragile digital world, and each boundary we respect gives us the freedom to innovate more boldly.

The lesson is simple: the technologies that endure are those built to be trusted. Privacy and security are not forces opposed to intelligence; they may be its greatest sign of maturity. When we develop AI models that are consistent with human experience, we do more than create technical knowledge; we advance humanity.

So, as we reach the frontier of a world shaped by artificial intelligence, one thing must be remembered:

Guardrails do not inhibit invention; they safeguard its very existence.

They form the channels between progress and protection, the silent lines along which intelligence is made human. The trendsetters and leaders who champion privacy guardrails, security guardrails, and AI guardrails will define tomorrow’s trustworthy technologies: those built on digital empathy rather than the exploitation of personal data.

In the world to come, privacy will no longer be a decorative feature. It is the language of trust, and trust is the very essence of intelligence. This piece has aimed to offer a realistic, practice-grounded definition of guardrails, covering both privacy and security, rather than a collection of buzzwords. Together, privacy guardrails and security guardrails form the only channel along which responsible intelligence can reach maturity. No one needs to fear this shift, for its rewards are desirable both publicly and privately.
