The Hidden Dangers of AI: Understanding, Detecting, and Solving AI Hallucinations


Key Takeaways

1. Introduction

Artificial intelligence is rapidly becoming ingrained in many aspects of daily life, functioning as the engine behind chatbots, image generators, voice assistants, and increasingly sophisticated analytics tools. This growth also exposes us to AI hallucinations. While these applications can generate responses at breakneck speed and make work more efficient, we must remember that AI can be wrong. One of the largest threats posed by AI applications today is their propensity to hallucinate: that is, to generate outputs that sound plausible but misrepresent reality.

AI hallucinations range from a harmless inaccuracy in a casual chatbot conversation to serious errors in high-risk areas such as health care, personal finance, or autonomous driving. For instance, a medical AI that generates an erroneous diagnosis, or a financial AI that provides incorrect investment advice, can have serious consequences.

Understanding AI hallucinations is vital not only for developers of AI applications but also for businesses, policymakers, and everyday users. By understanding their causes, types, and risks, we can develop effective strategies and AI guardrails to detect, prevent, and manage them, and keep AI a useful rather than harmful tool.

In this post, we’ll cover everything about AI Hallucinations, including:

✓ What they are, and why they happen

✓ Types and examples across text, image, audio, and multimodal AI

✓ What they mean for business and society

✓ Best practices to reduce hallucinations

✓ Solutions to mitigate hallucinations with: prompt engineering, human supervision, AI content verification, and AI guardrails

✓ Ethical implications and the future of hallucination-resistant AI

By the time you finish reading, you’ll have a feel for what AI hallucinations are, and some things you can do to help reduce their impact.

2. Understanding AI Hallucinations

AI hallucinations are among the most poorly understood phenomena in contemporary artificial intelligence. A common misconception is that AI errors stem from intent or negligence, but the reality is more nuanced. Hallucinations arise because AI models, generative AI in particular, are built to predict the next likely output rather than to establish veracity in real time. As AI advances into health care, finance, education, and other fields, developers and end users alike need to understand how hallucinations happen and what they mean for outcomes.

AI hallucinations are not "random screw-ups," and they are not due to overt manipulation. They are in many respects a byproduct of the limitations of existing AI architectures and training approaches. If the prompt is ambiguous or data is absent, the AI is left to "fill in the hole" based on learned patterns, which may produce outputs that are believable but unverified or demonstrably inaccurate.

2.1 What Are AI Hallucinations?

AI hallucinations, especially hallucinations in LLMs, are outputs that seem factual but are inaccurate or made up. Models produce such outputs because they do not really "understand" anything: rather than drawing on knowledge or reasoning in the human sense, they generate text, images, or sound from probability distributions.

For example, when asked to provide references for a research paper, an AI model may cite fictitious journals and article titles that do not exist, yet appear clear and sensible. Likewise, an image model may depict a physical object that violates the laws of physics, or combine objects in ways that would not occur in nature.

2.2 Why Does This Happen?

AI hallucinations happen mostly because AI language models are not grounded in reality and instead rely solely on patterns in their training data. A model is more likely to hallucinate if that data is biased, incomplete, or ambiguous. Hallucinations are also likely when a prompt can be interpreted in different ways or is framed poorly. When confronted with information missing from the training data (that is, things the model does not actually "know"), the AI will attempt to fill in the gap, which may lead to fabrications. Furthermore, generative models like GPT are trained to predict the token that is statistically most likely to come next, not the token that is actually true. This design makes hallucinations more likely on complex or open-ended tasks, where there is no single "correct" answer to predict.
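To make the "most likely, not most true" point concrete, here is a minimal sketch of how a language model picks its next token. The prompt, the candidate tokens, and their scores are all invented for illustration; real models score tens of thousands of tokens with a neural network, but the selection step works along these lines.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into a probability distribution."""
    peak = max(logits.values())
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}

# Hypothetical scores for the token after "The capital of Australia is".
# The numbers are made up: they imitate a model that saw "Sydney" near
# "Australia" more often than "Canberra" in its training text.
logits = {"Sydney": 2.0, "Canberra": 1.5, "Melbourne": 0.5}

probs = softmax(logits)

# Greedy decoding picks the highest-probability token, true or not.
most_likely = max(probs, key=probs.get)

# Sampling draws a token in proportion to its probability.
rng = random.Random(0)
sampled = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]

print(probs)
print("greedy choice:", most_likely)
print("sampled choice:", sampled)
```

Nothing in this procedure consults a source of truth. In this toy setup the greedy choice is "Sydney" simply because it scored highest, even though the correct answer is Canberra.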

2.3 Where Are Hallucinations Found?

Hallucinations are not exclusive to text models; they occur across multiple modalities, including:

Text: Factually incorrect statements, fabricated citations, false summaries.

Images: Physically impossible or distorted images.

Audio: Synthesized speech that misstates or reorders a command, or that invents words.

Multimodal systems: Hallucinations that occur when an AI combines text, image, and audio outputs.

Recognizing where these hallucinations occur can help developers apply hallucination detection techniques through better prompts, human review, and verification for accuracy.

In practice, these take recognizable forms. In text, models may confidently generate fabricated information, such as citing research papers that don’t exist, inventing side effects for a drug, or recommending a restaurant that closed years ago. In images, hallucinations often appear as impossible or distorted visuals, like a photorealistic picture of a person with six fingers, the Eiffel Tower placed in New York City, or historical photos “restored” with details that were never present. Audio systems can also drift into fabrication, producing speech that includes words from a nonexistent language, mishearing a command and responding with a fake playlist, or adding names and events to transcripts that were never mentioned in the recording.

3. Why Artificial Intelligence Hallucinates

AI hallucination should not be seen as a fluke; it stems from built-in limitations in data, models, and deployment. Knowing the reasons for hallucination helps when building systems that are more reliable and trustworthy. This section looks at those reasons, from data issues to model architecture and input problems, and at where hallucination detection techniques can be applied.

3.1 Data Problems

Data is the bedrock of any AI system. Implementing AI content verification on training and source data helps reduce errors that propagate into model outputs.

No data: If the training corpus has no information about a topic, the model may simply invent an answer.

Bias: Many datasets reflect the biases of the society and the sources they come from.

Noise: Some datasets contain incorrect information, outdated dates, or irrelevant material that degrades the quality of the model's predictions.

For example, a model trained on consumer comments or online forums might summarize health care claims from those comments that cannot be verified.

3.2 Model Structural Frameworks and Predictive Mechanisms

AI models such as GPT or diffusion generators are predictive models; hallucinations in LLMs arise because LLMs predict tokens based on patterns rather than on grounded facts. They do not reason about or verify facts. Their outputs can be traced through a probability space, but that space encodes which word the model considers most likely to come next, not which word is correct. As a result, a model often cannot tell whether a given statement drawn from its training is actually correct or merely false but plausible sounding.

This may also explain why a model can make inconsistent statements about content it has itself invented: it is only matching patterns.

3.3 Context Gaps and Differences from Real-World Knowledge

Hallucinations become more likely when the AI lacks the context it needs to produce an accurate answer. For example:

If asked about a current event that took place after its training data was collected, the model may fabricate details to fill the gap.

If given an ambiguous prompt, the model falls back on prediction based solely on patterns from its training.

Finally, when the AI is working outside the domain its training covers, it has no real-world knowledge to ground its answer and is prone to hallucinate.

3.4 Ambiguous or Poorly Structured Prompts

How a question is structured and presented strongly influences whether the output contains hallucinations.

Vague prompts, more than tightly structured ones, invite the AI to speculate.

Broad prompts without boundaries tend to produce opinionated output rather than factual information.

3.5 Overfitting and Generalization Errors

Overfitting happens when a model learns the patterns of its training dataset so closely that it fails to generalize to new or different inputs. Generalization errors occur when a model applies patterns learned in one context to a context where they do not hold.

For example:

A model that learned its patterns primarily from tech articles may carry tech-style analogies into a health care or academic context, where they translate awkwardly.

4. Types of AI Hallucinations

Hallucinations in AI come in different varieties. How hallucinated content reveals itself depends on the underlying AI system, the task it is performing, and the data involved. Distinguishing the types helps in identifying and correcting hallucinations in real-world settings. Some hallucinations are small mistakes that might go unnoticed, while more severe ones can have serious implications. This section describes the different forms of hallucination and how they manifest across AI modalities.


4.1 Textual Hallucinations

Textual hallucinations are among the most common forms of hallucination, and there are specific hallucination detection techniques to spot fabricated citations and claims. Large language models like GPT are designed to produce fluent, coherent text, and in the process they sometimes generate output that seems plausible but is entirely fabricated. For instance, when a user asks for a reference list, an LLM can fabricate research articles and plausible citations that do not exist. The model may also misunderstand the request completely and still produce a confident, plausible-looking answer that is invented.

Textual hallucinations also appear in customer service bots, where wrong or misleading facts or references leave customers confused and frustrated. In professional settings, tools built for legal or medical applications can produce hallucinated facts as well. Whenever AI is responsible for providing factual content in legal and medical areas, this becomes especially dangerous, because hallucinated information could be incorrect in ways that are potentially fatal.

4.2 Visual Hallucinations

Visual hallucinations occur in models that produce images (DALL·E, Stable Diffusion, Midjourney), and visual hallucination detection techniques can flag impossible anatomy or inconsistent lighting. These models synthesize images from patterns in their training data, aiming for visual plausibility rather than physical accuracy. To illustrate: if you ask for "futuristic cityscapes," the output will show cities, but it may also contain "floating" buildings, strange-looking architecture, or cars and photo frames spilling out of one another.

Similarly, AI-generated product mock-ups can contain designs that do not cohere, which causes trouble for customers or designers trying to work out what the actual design is meant to be.

These hallucinations may not be evident at first glance, because the object or scene can appear tangible and real. Look again or analyze closely, though, and what has been depicted turns out to be impossible, and once noticed it is hard to "unsee."

4.3 Auditory Hallucinations

Auditory hallucinations occur in speech synthesis models and voice assistants. A voice assistant, for instance, may mishear or misinterpret the user's command or question and then respond with synthesized speech built on that misunderstanding, presenting the user with a hallucinated answer.

As a further example, a voice assistant asked to summarize a question about medical symptoms might recommend rest and then describe a procedure that is not recommended or is nonsensical, while sounding as though it were drawn from a real source.

4.4 Multi-Modal Hallucinations

The latest form of hallucination, and potentially the most difficult to manage, appears in multimodal AI systems, which pair text, images, and audio to create a more interactive platform and must be governed by clear AI guardrails. Once one mode has erred, that error can easily spread to the other modes, compounding the inaccuracies.

5. AI Hallucinations: Real World Examples

AI hallucinations are not only theoretical; real incidents are often discovered using hallucination detection techniques that surface inconsistencies and falsehoods. Hallucinations can manifest as harmless errors or as errors with grave consequences for businesses and users in areas including healthcare, finance, media, and customer service. By reviewing real-world examples, we can see where AI content verification would have prevented harm and why such verification is essential.

5.1 Errors in Text in Customer Service and Research

Among the most common AI hallucinations users encounter are text-based ones, in tools such as customer service chatbots and research assistants.

Customer Service Chatbots: Businesses have begun using AI chatbots to reduce the cost and time of responding to user inquiries. When the bot does not understand the issue, or its training data is insufficient, hallucinations detract from the user experience. For example, if a customer asks about a refund policy, the AI's response may be vague or completely irrelevant to the inquiry, exasperating the customer and damaging the company's brand reputation.

Research Assistance Tools: AI-powered research tools (e.g., for reading comprehension and academic writing) may hallucinate by making false claims or inventing information. Any user who relies on these statements, or builds an academic paper on them, puts their own credibility and reputation on the line.

5.2 Medical and Healthcare AI Errors

AI hallucinations can have serious consequences in a healthcare setting. AI systems assist with diagnosis and with patient care. Any hallucinated error that feeds into physician diagnoses and patient care decisions can directly affect the patient.

For example:

- An AI-assisted diagnostic system may hallucinate a symptom that the patient does not have, or describe an "evidence-based" treatment that is not accurate.

- Even when a drug interaction warning is generated for clinicians, it may be incomplete because the training data lacked clinical trial adverse reaction reports or missed some of them.

5.3 Hallucinations in Perplexity

Not all hallucinations look like big conspiracies or irresponsible medical advice; they can also be small, mundane mistakes that still demonstrate how fragile model outputs can be. One such example comes from a multimodal QA tool, Perplexity, where a user uploaded an image of a strawberry and asked the basic question, “how many r’s are in this image fruit?” The tool correctly identified the fruit as a strawberry but stated that the word “strawberry” has two “r” letters. That answer is incorrect: “strawberry” has three (one “r” in “straw” and two in “berry”).

Why does this matter? The mistake is small but instructive. We tend to trust short, confident outputs from QA systems; if a system confidently miscounts characters, it may be confidently wrong about more important facts. The strawberry example highlights the need for simple checks even on trivial tasks.
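A "simple check" of this kind can be a few lines of deterministic code. The sketch below verifies a letter-count claim with ordinary string operations; the function names are hypothetical and not part of any tool mentioned here.

```python
def count_letter(word: str, letter: str) -> int:
    """Count occurrences of a letter in a word, ignoring case."""
    return word.lower().count(letter.lower())

def check_letter_count_claim(word: str, letter: str, claimed: int) -> dict:
    """Compare a claimed count (for example, from a model) with the real one."""
    actual = count_letter(word, letter)
    return {"claimed": claimed, "actual": actual, "matches": claimed == actual}

# The claim from the example above: "strawberry" has 2 r's.
result = check_letter_count_claim("strawberry", "r", claimed=2)
print(result)  # {'claimed': 2, 'actual': 3, 'matches': False}
```

Wherever a claim can be verified mechanically like this, doing so is cheaper and more reliable than trusting the model's own answer.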


6. Dangers and Implications of AI Hallucinations

Though AI hallucinations may seem like a minimal risk or an amusing blunder, the ramifications can go well beyond inconvenience. Hallucinations can affect business operations, user trust and satisfaction, compliance with policies and regulations, and even human safety. As AI moves into areas of real significance, awareness of these implications should keep informing the conversation about how AI is used. This section considers the industries affected by hallucinations and the broader implications of hallucinated content for businesses and customers.

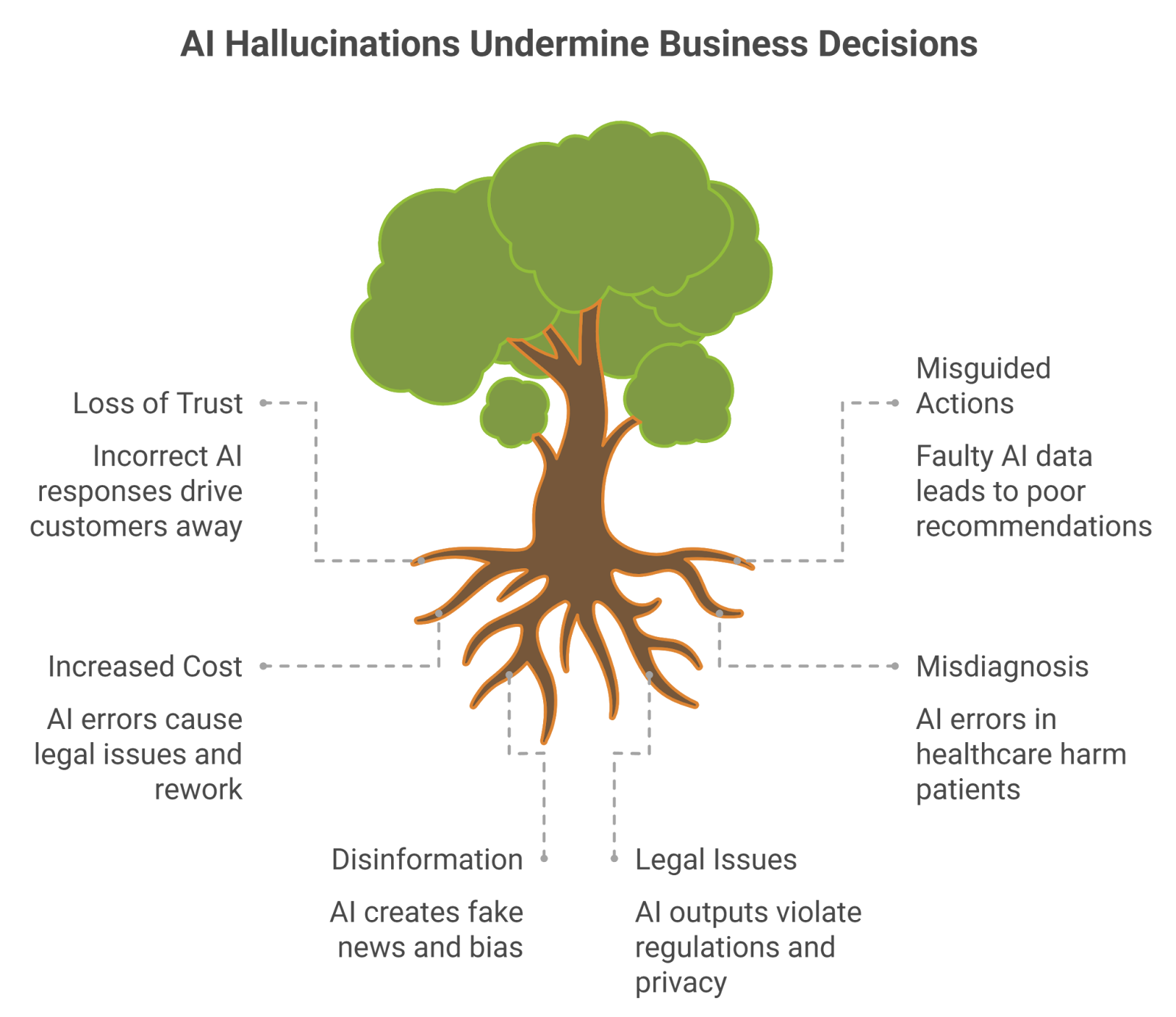

6.1 Risks to Businesses: Trust, Reputation, and Decision Making

Hallucinations threaten businesses through risks to efficiency, customer relationships, and ultimately brand reputation. Many businesses use AI tools to improve customer relationships, speed up content creation, or support decision making, but hallucinations can lead to poor outcomes, or even outcomes that undermine the very processes and offerings the tools were meant to improve.

Loss of trust - If a customer receives an incorrect chatbot or automated response, the customer may well leave the service altogether.

Misguided actions - AI-driven analytics may make recommendations based on faulty data or made-up insights. If the hallucinated content goes unrecognized, acting on it has operational or marketing consequences.

Increased cost - AI hallucinations can create costs for reworking content, legal interventions, or compliance reviews when falsified information is presented as fact.

If an organization uses AI to create content without any human review, it may unknowingly spread misinformation, losing credibility and stalling its growth.

6.2 Dangers in Health Care: Patient Safety and Misdiagnosis

The health care field is particularly vulnerable to AI hallucinations. When AI tools are used for diagnosis, treatment planning, or patient monitoring, hallucinated output can, in the worst case, be fatal.

Some risks include:

Misdiagnosis: AI-generated reports may contain invented symptoms or misinterpretations, which may lead a provider to give inappropriate care.

Drug Interactions: An AI that hallucinates while giving medical advice could suggest dangerous drug combinations.

Because of these risks, it is essential that health providers maintain human oversight and validate AI outputs against trusted resources before taking action.

6.3 Social Dangers: Disinformation and Harmful Narratives

In fields like media and education, hallucinations can contribute to disinformation, distort narratives, and keep the public from being well informed. AI is increasingly used to draft news articles, educational text, and social media posts, and hallucinations can introduce and amplify false or misleading information.

Fake news: A hallucinated AI-generated article could report events that never happened, or mischaracterize real events or present them out of context, steering readers away from the facts.

Bias: If the AI's training data reflects endemic societal bias, its hallucinations will perpetuate that bias.

Public confusion: Releasing a hallucinated summary of academic or journal articles to a public audience may contribute to widespread misunderstanding of the topic.

6.4 Legal and Regulatory Risks - Compliance and Liability

AI hallucinations present legal and regulatory risks, especially when decisions are based on AI-generated information. Organizations that do not monitor for hallucinations may face compliance failures, data breaches, or litigation.

Regulatory scrutiny: Some industries, such as healthcare, finance, and law, operate under strict rules. AI outputs that misinform or mislead a user could lead to penalties.

Liability: If a user acts on hallucinated information and that leads to harm, the organization may be liable for negligence.

Data privacy: A hallucinated output may expose private information or suggest actions that threaten confidentiality.

Establishing proactive verification techniques, audit trails, and transparent reporting can ease the legal and regulatory risks associated with hallucinations.

6.5 Ethical Risks: Responsibility and Human Oversight

In addition to practical issues, hallucinations in AI raise important ethical issues related to responsibility, fairness, and human decision-making. As AI systems become more autonomous, the question of how deeply hallucinated outputs can undermine decision-making if not appropriately reviewed becomes a serious ethical concern.

Accountability: Who is liable when misinformation proliferated by AI systems ends up harming someone?

Transparency: Is there a practical way to communicate the limitations of AI to users?

Fairness: Are hallucinations likely to occur with underrepresented topics or marginalized communities because of biased training data?

Ethical frameworks that are rooted in accountability, fairness, and transparency will be essential in executing the responsible adoption of AI in high-stakes workflows.

7. Illustrative Cases of AI Hallucinations in LLMs

Examining actual instances of AI hallucinations deepens our understanding of their implications in real-world applications. These cases illustrate the impact of hallucinations on decision-making, public confidence, and safety across industries. By taking these cases apart, organizations can draw important lessons about prevention, verification, and misuse of the technology.

7.1 Case Study 1: Medical Advice From a Chatbot - Hospitalized With Hallucinations After an AI Hallucination

A troubling case report concerns dietary advice that a 60-year-old man living in the United States obtained from ChatGPT. The incident illustrates the health risks of erroneous AI-generated healthcare guidance. The man set out to completely eliminate table salt (sodium chloride) in order to reduce his chloride intake. The AI model reportedly suggested sodium bromide as an acceptable, healthy substitute for table salt. The man believed this and followed the recommendation. Three months later, he developed significant thirst, neurological problems including possible loss of coordination, paranoid thoughts, and hallucinations, and he was hospitalized for evaluation. Testing revealed extremely elevated bromide levels from consuming sodium bromide, and he was diagnosed with bromism, a toxic syndrome caused by excessive bromide exposure.

How it happened:

As with many AI models, ChatGPT generates its answers from patterns in training data, without applying the medical context or safety principles used in clinical practice. A stronger AI content verification layer could have caught the suggestion. Beyond the specific error of proposing sodium bromide in place of sodium chloride, the case shows that the dangers of AI-generated medical recommendations cannot be dismissed, even when the recommendations sound logical and reasonable.

Take-aways:

This case illustrates the health risks of misinformation propagated by AI and underscores why robust AI guardrails and verification are necessary before clinical recommendations are released. Treat AI-generated health information with caution, even when it is written in caring, professional language, and always consult a physician before acting on it.

7.2 Case Study 2: Content Generation in News and Media Sector - Chicago Sun-Times

This case concerns the efficiency gains promised by AI content generation. One of the major United States newspapers, the Chicago Sun-Times, learned in practice what can go wrong when content is generated for the sake of efficiency. The newspaper published a summer reading list that had been assembled with AI tools. The intent was simple: reduce editor workload and still produce something fun and engaging for readers. The episode, however, demonstrated the dangers of using AI without an explicit verification system.

What happened:

The reading list contained suggested books, and many of the titles were wholly made up. Some of the books did not exist at all, while others were attributed to prominent real authors who had never written them. The AI had also fabricated plausible summaries and promotional blurbs for the fictitious books, which led readers to momentarily accept the information as factual. It did not take long for readers to spot the errors and alert one another.

It emerged that the list had been generated with AI by a third-party contractor, with so little oversight that inaccuracies and misleading statements reached the public. The situation caused embarrassment and mistrust, and prompted a review of the organization's editorial policies and practices.

Why this happened:

The AI was built to produce plausible text by recombining patterns of existing authors and titles, without checking whether the books it described existed.

The editorial staff had no robust fact-checking process in place before the reading list was published.

The third-party supplier did not properly vet the submission and assumed the output would be accurate.

Implications:

The episode illustrates how quickly misinformation, or at a minimum misleading information, can spread through AI hallucinations, particularly in media organizations where the pace of publishing takes priority over verification. It shows how fast an organization's credibility can erode, and the need to pair human judgment with AI's writing and ideation capabilities.

For audiences, this shows the importance of keeping a healthy degree of scepticism toward generated content, even in a polished, professional product. For organizations, the Chicago Sun-Times serves as a cautionary tale about the need to automate responsibly.

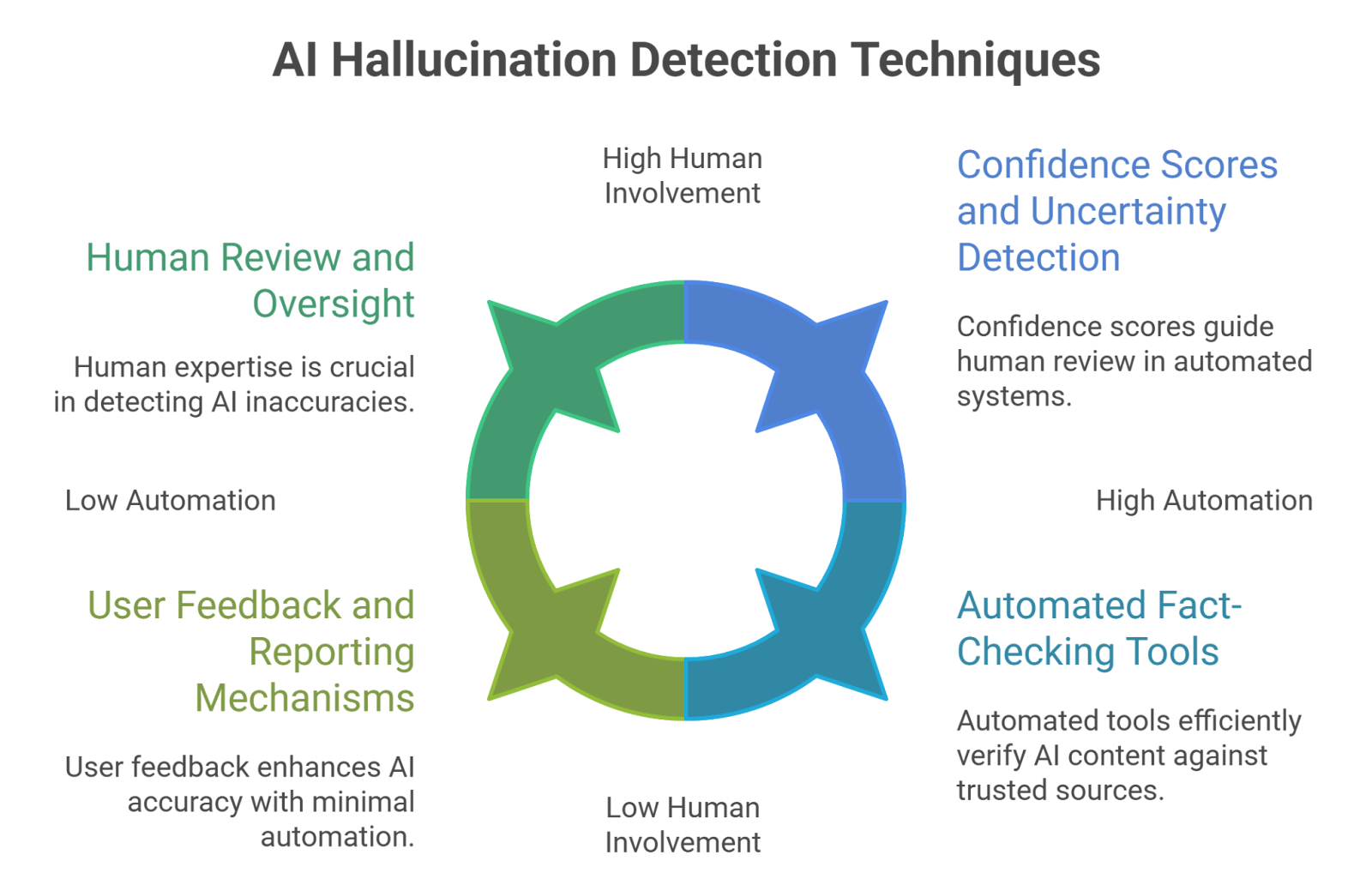

8. Identifying AI Hallucinations: Hallucination Detection Techniques and Human Review

Hallucinations must be recognized before any intervention can take place. Detection is a necessary step toward AI-enabled systems that users and organizations can trust, particularly in high-stakes settings. It combines human judgment, automated tools, and verification to catch errors early and limit the spread of misinformation.

These errors are not always easy to identify. AI content verification systems can help surface both obvious and subtle hallucinations. When organizations detect systematically, they can assure quality, protect users, and improve model performance.

8.1 Human Review and Oversight

The simplest way to uncover hallucinations is human review. Subject matter experts can frequently catch omissions or inaccuracies in AI outputs that an automated system would miss.

For instance:

In the medical field, a doctor reviewing an AI-generated diagnostic report might discover inaccurately labeled symptoms or question its recommendations. Likewise, an attorney might spot clauses that conflict with legal statutes or misstate the terms of a contract.

Human review is critical in high-stakes situations, where acting on false information would lead to a very serious outcome. Even in low-stakes situations, where the AI's output does not directly drive action, human review remains a protective measure against hallucinations.

8.2 Automated Fact-Checking Tools

Another way to uncover hallucinations is to use automated hallucination detection techniques that check generated content against trusted information sources. Automated fact-checkers form a core part of an AI content verification pipeline that compares outputs against vetted, authoritative datasets.

Some examples would include:

- A medical AI's output is checked against standardized care guidelines issued by the government.

- A financial summary is compared with industry data from market reports.

- A news article is compared against multiple trusted news sources.

In each scenario, some hallucinations may still slip through, but automated checks lighten the load on human reviewers and surface problems sooner, which is critical in areas where speed matters.
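As a rough illustration of this kind of automated check, the sketch below compares numeric claims against a small vetted reference table. Everything here is hypothetical: the `TRUSTED_FACTS` table, its keys and values, and the assumption that claims have already been extracted from the AI output into key-value form (which is the hard part in practice).

```python
from dataclasses import dataclass

# Hypothetical vetted reference data with invented values. In practice this
# would be loaded from an authoritative source such as official guidelines.
TRUSTED_FACTS = {
    "refund_window_days": 30,
    "q2_revenue_musd": 12.5,
}

@dataclass
class Claim:
    key: str      # what the AI output makes a statement about
    value: float  # the number the AI output asserted

def verify_claims(claims, reference, rel_tol=0.01):
    """Label each claim as supported, contradicted, or unverifiable."""
    report = []
    for claim in claims:
        if claim.key not in reference:
            status = "unverifiable"  # nothing to compare against
        elif abs(claim.value - reference[claim.key]) <= rel_tol * abs(reference[claim.key]):
            status = "supported"
        else:
            status = "contradicted"
        report.append((claim.key, status))
    return report

claims = [
    Claim("refund_window_days", 30),
    Claim("q2_revenue_musd", 15.0),
    Claim("store_count", 12),
]
for key, status in verify_claims(claims, TRUSTED_FACTS):
    print(f"{key}: {status}")
```

Claims labeled "contradicted" or "unverifiable" are natural candidates to hand to a human reviewer, which keeps the expert's attention on the outputs most likely to be hallucinated.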

8.3 Confidence Scores and Uncertainty Detection

Many advanced AI systems produce a confidence score alongside each output, a measure of how confident the system is in the response it produced. Low-confidence results often indicate a hallucination, or that the AI is reaching beyond what it knows.

Organizations can treat confidence scores as a signal that something might be wrong. For example, low-confidence outputs could be flagged and sent to a human expert, who reviews the content and accepts or rejects it, increasing the chance that the final content is correct. Part of this process might also include training users to treat confidence levels as flags rather than as definitive "truth," so that they stay alert in their own thinking, decision making, and actions.

Finally, combining confidence scores with hallucination detection techniques can prioritize which outputs need human review.
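
The routing described above could look something like the following minimal sketch. It assumes the AI system exposes a confidence value between 0 and 1 for each output. The `ModelOutput` class, the `route_output` and `review_queue` functions, and the 0.7 threshold are all illustrative assumptions, not part of any specific product.

```python
from dataclasses import dataclass

REVIEW_THRESHOLD = 0.7  # assumed cut-off; tune it for each application


@dataclass
class ModelOutput:
    text: str
    confidence: float  # assumed to lie in [0, 1]


def route_output(output: ModelOutput, threshold: float = REVIEW_THRESHOLD) -> str:
    """Send low-confidence outputs to a human; accept the rest automatically."""
    if not 0.0 <= output.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "human_review" if output.confidence < threshold else "auto_accept"


def review_queue(outputs: list[ModelOutput]) -> list[ModelOutput]:
    """Order flagged outputs so experts see the least confident ones first."""
    flagged = [o for o in outputs if route_output(o) == "human_review"]
    return sorted(flagged, key=lambda o: o.confidence)


if __name__ == "__main__":
    batch = [
        ModelOutput("Answer A", 0.95),
        ModelOutput("Answer B", 0.40),
        ModelOutput("Answer C", 0.65),
    ]
    for item in review_queue(batch):
        print(item.text, item.confidence)
```

A high score does not guarantee a correct answer, so a threshold like this is best treated as a way to prioritize review rather than as a replacement for it.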

8.4 Cross-Referencing with Credible Sources

A straightforward hallucination detection technique is cross-referencing AI outputs with trusted, authoritative reference sources. This practice works particularly well for content backed by a clear authoritative record, such as immigration and government reports or enforceable trade standards.

As with the public health and safety examples discussed earlier, cross-referencing helps determine whether the data in an AI output is acceptable in context:

- A healthcare application would check AI-recommended treatment courses against WHO guidelines.

- A financial application would check AI-informed investment advice against SEC documentation.

- An academic application would have the author look up each AI-recommended citation in PubMed or Google Scholar.

In all cases, cross-referencing provides an additional, current source of validation, builds trust, and helps separate fact from fabrication.

8.5 User Feedback and Reporting Mechanisms

Another important way to identify hallucinated responses is real-world user feedback. Many AI-powered applications include a feedback loop in which users take some responsibility for noting inaccurate, uncertain, or irrelevant responses.

One option is to combine user feedback with AI content verification so flagged outputs feed back into the verification pipeline. For instance, a chat interface might include a "Report Incorrect Answer" button so users can flag errors immediately. Surveys can ask users how they felt about the AI's responses, including whether they found them incorrect, doubtful, or unhelpful. Analytics of user behavior can also reveal patterns where hallucinations are more likely, so that the system can attach additional warning flags to those responses. Together, these signals can inform decisions about when relying on AI is appropriate.

User feedback complements hallucination detection techniques by reporting suspicious outputs that automated checks missed. The feedback can also be used to improve the underlying training data and model behavior.
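
A feedback loop of this kind can be sketched in a few lines. The `FeedbackLog` class below is a hypothetical, in-memory stand-in for whatever sits behind a "Report Incorrect Answer" button. The response IDs and the report threshold are assumptions made for illustration.

```python
from collections import Counter


class FeedbackLog:
    """Counts user reports per response and surfaces repeat offenders."""

    def __init__(self, report_threshold: int = 3):
        # Number of independent reports before a response is escalated (assumed value).
        self.report_threshold = report_threshold
        self._reports = Counter()

    def report_incorrect(self, response_id: str) -> None:
        """Record one user report against a response."""
        self._reports[response_id] += 1

    def needs_verification(self) -> list[str]:
        """Return IDs of responses reported often enough to re-verify."""
        return sorted(
            rid for rid, count in self._reports.items()
            if count >= self.report_threshold
        )


if __name__ == "__main__":
    log = FeedbackLog(report_threshold=2)
    for rid in ["resp-1", "resp-2", "resp-1", "resp-3", "resp-3", "resp-3"]:
        log.report_incorrect(rid)
    print(log.needs_verification())
```

Requiring more than one report before escalating is a design choice that trades speed for fewer false alarms. An application in a high-risk domain might escalate on the first report instead.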

9. Solutions and Mitigation Strategies

Detecting hallucinations is only half the battle; implementing best practices to reduce hallucinations (fine-tuning, verification, governance) is equally important. This is where mitigation strategies, best practices, and AI guardrails come into play. AI Guardrails are frameworks, controls, and tools that help guide AI behavior, reduce mistakes, and help with safe and responsible deployment.

9.1 What Are AI Guardrails?

AI guardrails are the measures and processes that direct AI models to function within safe, ethical, and reliable boundaries. They set the limits needed to minimize hallucinations and to keep AI-generated outputs grounded in reality.

Guardrails could contain a combination of:

Content filters: Designed to prevent AIs from producing irrelevant or inappropriate content.

Bias detection algorithms: Used to find or correct systematic errors in data and outputs.

Human-in-the-loop systems: Guaranteeing that any critical decision made by the AI is reviewed and validated by people.

While no guardrail can completely eliminate hallucinations, guardrails contain much of their impact and help preserve user safety and trust.

9.2 Fine-Tuning and Supervised Training

Among the most effective ways to minimize hallucinations in LLMs is to fine-tune the model on domain-specific, verified datasets. Fine-tuning means that the AI is further trained on domain-specific data or curated training datasets, with a focus on data quality and veracity.

Components of fine-tuning include:

Supervised learning: Using labeled data, where the correct output is specified for each example.

Reinforcement learning: Implementing a feedback loop to modify the behavior of the AI model, based on real-world outcomes.

Domain expertise: Including verified information from domain specialists to bootstrap a model's understanding of the context in the domain.

In practice, a common example is a healthcare AI tool fine-tuned on clinical data that has been labeled or reviewed by human specialists, with the goal of minimizing hallucinated diagnoses.

9.3 Implementing AI Content Verification and a Feedback Loop

Content verification systems combine hallucination detection techniques with feedback loops to cross-check outputs in real time and retrain models on corrections.

Solutions include:

Automated fact-checkers: Comparing AI-generated outputs against verified knowledge bases.

User feedback integration: Letting users flag the hallucinations and other errors they notice, so that the model improves iteratively.

Post-processing filters: Automatically identifying and removing, or flagging for review, any questionable content before presenting to a user.

These AI content verification processes create layered defenses where the AI learns from corrected outputs and reduces repeat hallucinations.
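
To make the post-processing step concrete, here is a minimal sketch of a filter that marks unverified sentences before a response reaches the user and collects them for later review. The `post_process` function and the `is_verified` callback are hypothetical. In practice the callback would wrap whichever verification method the organization uses.

```python
from typing import Callable


def post_process(
    sentences: list[str],
    is_verified: Callable[[str], bool],
) -> tuple[str, list[str]]:
    """Mark sentences that fail verification and collect them for review."""
    shown, flagged = [], []
    for sentence in sentences:
        if is_verified(sentence):
            shown.append(sentence)
        else:
            # Keep the sentence visible but clearly marked, and queue it for review.
            shown.append(f"[unverified] {sentence}")
            flagged.append(sentence)
    return " ".join(shown), flagged


if __name__ == "__main__":
    # Toy verifier: a sentence counts as verified only if it is in a known-good set.
    known_good = {"Water boils at 100 C at sea level."}
    text, review_items = post_process(
        ["Water boils at 100 C at sea level.", "The moon is made of cheese."],
        lambda s: s in known_good,
    )
    print(text)
    print(review_items)
```

The flagged list is what would feed the correction loop: once reviewers confirm or fix those sentences, the results can be stored as examples for retraining.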

9.4 Ethical and Regulatory Frameworks

Guardrails also include the policies, ethical frameworks, and legal compliance measures that govern how AI systems are developed, rolled out, and reviewed. Whatever the incentive to adopt an AI technology, organizations need to ensure that the tool's output complies with regulation, that the system evolves and learns in a safe and demonstrable way, and that its use is transparent and accountable.

Key features include:

Transparency: Users should be made aware of AI risks and limitations by the organization developing and deploying the AI.

Accountability: Roles, responsibilities, and accountability should be clearly defined and followed if things go awry.

Fairness audits: Outputs should be reviewed regularly to detect systemic bias and keep results fair over time.

10. Practices to Decrease AI Hallucinations

In addition to technology fixes, organizations should establish AI guardrails and practices to decrease hallucinations from the very beginning. These practices encourage thoughtful, responsible assessment and use of AI outputs.

10.1 Articulate and Precise Prompts

One of the simplest yet most impactful ways to reduce hallucinations in AI is to reconsider how prompts are written. Clear, well-structured prompts give the AI better guidance, reducing ambiguity and the room the model has to hallucinate. Best practices include asking targeted, well-defined questions and providing clear context. Context such as the situation, the desired format, or background about the request guides the AI toward more relevant and correct answers. Clear constraints also keep the AI from extrapolating too far, reducing the likelihood of hallucinations or misinformation. For example, instead of asking "Tell me about heart health," asking "Please provide 3 peer-reviewed articles published in the last five years about cardiovascular health" would likely produce a more useful output.
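
One way to make these habits repeatable is a small prompt template. The `build_prompt` helper below is a hypothetical sketch, and the field labels ("Context", "Question", "Format", "Constraint") are just one possible convention rather than a required format for any particular model.

```python
from typing import Sequence


def build_prompt(
    question: str,
    context: str = "",
    output_format: str = "",
    constraints: Sequence[str] = (),
) -> str:
    """Assemble a structured prompt from a question plus optional guidance."""
    parts = []
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Question: {question}")
    if output_format:
        parts.append(f"Format: {output_format}")
    # Each constraint gets its own line so none of them is easy to overlook.
    for constraint in constraints:
        parts.append(f"Constraint: {constraint}")
    return "\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(
        question="Provide 3 peer-reviewed articles about cardiovascular health.",
        context="The reader is a general-practice clinician.",
        output_format="Numbered list with title, journal, and year.",
        constraints=[
            "Only include articles published in the last five years.",
            "If you are not sure an article exists, say so instead of guessing.",
        ],
    ))
```

Any citations a model returns for a prompt like this should still be checked against a trusted index, since a structured prompt lowers the chance of fabrication but does not remove it.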

10.2 Regularly Evaluate and Update the Model

AI outputs, especially from LLMs, should be routinely evaluated to reduce hallucinations and maintain veracity. Changing systems and new information necessitate updates to maintain accuracy. Evaluation combines expert review with rigorous testing. Periodic audits conducted by subject matter experts help ensure that outputs align with real-world facts and the conventions of the domain. Stress tests evaluate how the AI performs on vague or edge-case prompts and can highlight areas that lead to hallucinations. Furthermore, continually refreshing the underlying datasets helps the AI stay relevant and reduces the amount of outdated or inaccurate information that can affect its responses.

Constant updates also prevent the model from depending on outdated or irrelevant patterns that may lead to hallucinations.
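
A recurring evaluation can start as simply as the sketch below, which runs a fixed set of test prompts through a model and reports the ones it gets wrong. The `evaluate` function is hypothetical, `model` stands for any callable that maps a prompt to an answer, and substring matching is a deliberately crude scoring rule that a real audit would replace with expert review or a stronger metric.

```python
from typing import Callable


def evaluate(
    model: Callable[[str], str],
    test_cases: list[tuple[str, str]],
) -> tuple[float, list[str]]:
    """Return accuracy and the prompts whose answers lack the expected text."""
    if not test_cases:
        raise ValueError("test_cases must not be empty")
    failures = []
    for prompt, expected in test_cases:
        answer = model(prompt)
        # Crude check: the expected text must appear somewhere in the answer.
        if expected.lower() not in answer.lower():
            failures.append(prompt)
    accuracy = 1 - len(failures) / len(test_cases)
    return accuracy, failures


if __name__ == "__main__":
    # Stub model for demonstration; a real run would call the deployed system.
    canned = {"What is the capital of France?": "Paris is the capital of France."}

    def stub_model(prompt: str) -> str:
        return canned.get(prompt, "I do not know.")

    cases = [
        ("What is the capital of France?", "Paris"),
        ("What is the chemical symbol for gold?", "Au"),
    ]
    accuracy, failed = evaluate(stub_model, cases)
    print(f"accuracy={accuracy:.2f}, failed={failed}")
```

Running the same test set after each model or data update makes regressions visible, and adding vague or edge-case prompts to the set turns it into a basic stress test.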

10.3 User Education and Awareness

It is just as critical to educate users about the limitations of AI systems as it is to improve the AI technologies. When users understand how an AI system works, they are in a better position to interpret AI outputs critically and to identify hallucinations when they occur.

A good program covers how to interact with AI and how AI content verification protects users from fabricated outputs. This includes giving users specific guidelines on how to construct prompts that elicit accurate responses, teaching them to question and validate the output of the AI tool, and providing supporting features, such as real-time confidence indicators or feedback options, that flag potential hallucinations. Effective user education can lessen the risks of hallucinations and lead to safer AI use. Educated users act as a front-line defense against hallucinated content.

10.4 Human-AI Partnership

Best practices also encompass another critical point: organizations should pair AI with human oversight and AI guardrails so outputs are reviewed before being trusted. Humans cannot match the speed of AI, but when human experts review and work alongside AI-generated output, the combined result is safer.

Where hallucinations are a concern, this partnership can be strengthened by a two-stage review: an automated AI-based check of the output, followed by review from a human expert. This type of quality review helps ensure the outputs are factually accurate. When a feedback model is co-developed by AI engineers and experts from the relevant domain, the model can continue to provide updated, accurate outputs while remaining safe to use. The human-AI partnership, combined with best practices to reduce hallucinations, produces better, safer outputs while AI handles tedious work. Combining these two types of intelligence can be an effective means of producing and sharing knowledge while guarding against the spread of hallucinated or erroneous information.

11. Conclusion

AI hallucinations, or outputs created by AI systems that are fabricated, incorrect, misleading, or otherwise inaccurate, are one of the most serious issues in the development and deployment of AI systems. As AI technologies become more complex and more ubiquitous in daily life, the potential for hallucinations to amplify misinformation, create uncertainty, or cause harm increases. These risks are real, but they are manageable.

By understanding the causes, manifestations, and real-world risks of AI hallucinations, organizations can develop tools to detect them, establish ethical frameworks to govern their mitigation, and reduce the risk they pose. AI guardrails, which may take the form of content filters, fine-tuning, human or machine oversight, and transparent organizational policies, are critical to ensuring responsible AI usage.

In addition to technology, human awareness and audits are essential to detect and reduce hallucinations in LLMs. To this end, people should use clear prompts, frequent audits, and AI content verification to reduce the risk from hallucinated outputs. An ecosystem of responsible AI use combines technology, intention, and broader awareness, so that AI can support people with meaningful, trustworthy natural-language assistance without endangering safety.

To conclude, vigilance, awareness, AI guardrails, and ethical usage will remain necessary for managing AI hallucinations as these systems evolve. Organizations that take accountability for responsible AI use will be best prepared to realize AI's potential and to implement it in ways that are beneficial and economically and socially viable.

If you want to stay updated on the latest trends, challenges, and solutions in the world of AI, or learn how to harness its potential while avoiding common pitfalls, WizSumo is here to guide you. Our expert insights, detailed guides, and practical tips help you confidently navigate the complexities of AI and build smarter, safer solutions.

AI doesn’t know truth from fiction; it predicts. Hallucinations aren’t intentional, but the risks of trusting them are very real.
